Google's A2UI standard moves AI agents from text chat to dynamic native UIs.

Google’s open-source protocol sends declarative JSON to instantly generate safe, native user interfaces.

December 20, 2025

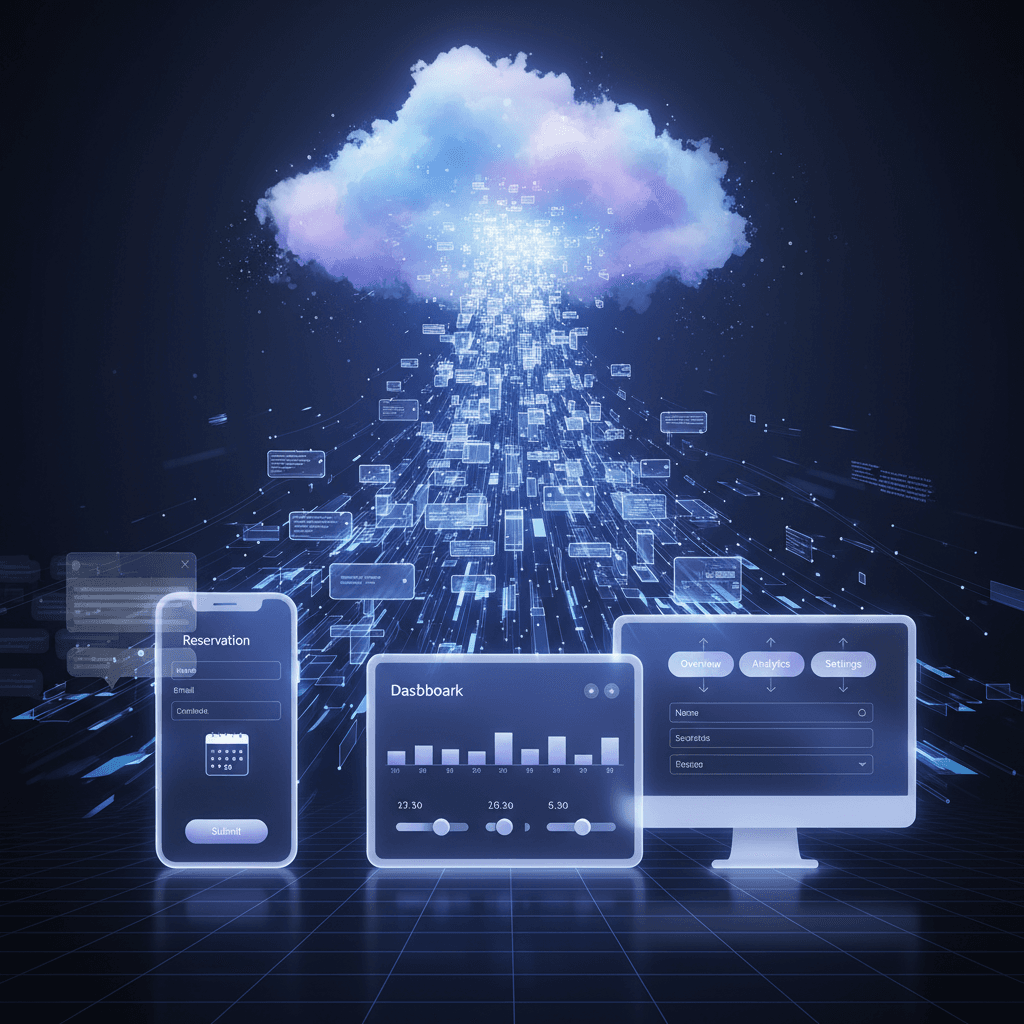

Google's introduction of the Agent-to-User Interface, or A2UI, is poised to redefine how users interact with sophisticated AI agents, moving beyond text-only chat windows to dynamically generated, rich graphical interfaces. This new open-source standard provides a universal language for AI agents to request buttons, forms, charts, and other complex UI elements on the fly, allowing large language models to respond not just with words but with a fully interactive experience that blends into any host application. The project addresses a critical bottleneck in the evolution of AI: the difficulty remote, decentralized agents have in securely generating a front-end interface that fits its context and feels native to the application it is used in.

At its technical core, A2UI operates on a principle of sending data, not executable code. This is a deliberate and crucial design choice that separates it from earlier, less secure methods where AI agents might have been tasked with generating full HTML or JavaScript to be rendered within sandboxes or iframes. Instead, the A2UI protocol is a declarative JSON format that an AI agent transmits to a client application. This JSON payload describes the *structure* of the desired UI, such as a Card, a Button, or a DatePicker, along with the data to populate it. The client application, which already possesses a "trusted components catalog" of pre-approved UI widgets, interprets this description and renders the elements using its own native components. This mechanism ensures two vital outcomes: security is maintained because the agent cannot inject arbitrary, malicious code, and visual consistency is guaranteed because the resulting interface automatically inherits the host application's established design system, avoiding the "janky" appearance common with sandboxed third-party UIs. The protocol is also designed to be LLM-friendly, utilizing a flat component list with ID references that facilitates incremental generation, allowing for progressive rendering and a responsive user experience as the AI agent formulates its response.[1][2][3][4][5]
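To make the mechanism concrete, here is a minimal sketch of how a client might resolve a flat, ID-referenced component list against its trusted catalog. The payload shape, the field names (`root`, `components`, `props`, `children`), and the `resolve` helper are assumptions made for illustration; they are not taken from the A2UI specification.

```python
from typing import Any

# Hypothetical payload shape for illustration; NOT the actual A2UI schema.
# Components arrive as a flat list and refer to their children by ID.
payload = {
    "root": "card-1",
    "components": [
        {"id": "card-1", "type": "Card", "children": ["title-1", "date-1", "btn-1"]},
        {"id": "title-1", "type": "Text", "props": {"text": "Book a table"}},
        {"id": "date-1", "type": "DatePicker", "props": {"label": "Date"}},
        {"id": "btn-1", "type": "Button", "props": {"label": "Submit"}},
    ],
}

# The client's trusted catalog: only these component types may be rendered.
TRUSTED_CATALOG = {"Card", "Text", "DatePicker", "Button"}


def resolve(payload: dict[str, Any], catalog: set[str]) -> dict[str, Any]:
    """Turn the flat, ID-referenced list into a nested tree, rejecting
    any component type the client has not pre-approved."""
    by_id = {c["id"]: c for c in payload["components"]}

    def build(component_id: str, seen: frozenset) -> dict[str, Any]:
        if component_id in seen:
            raise ValueError(f"cycle at {component_id!r}")
        if component_id not in by_id:
            raise ValueError(f"unknown component id {component_id!r}")
        comp = by_id[component_id]
        if comp["type"] not in catalog:
            raise ValueError(f"component type {comp['type']!r} is not in the catalog")
        seen = seen | {component_id}
        return {
            "type": comp["type"],
            "props": comp.get("props", {}),
            "children": [build(cid, seen) for cid in comp.get("children", [])],
        }

    return build(payload["root"], frozenset())


tree = resolve(payload, TRUSTED_CATALOG)
print(tree["type"], [child["type"] for child in tree["children"]])
```

Because the agent only ever names component types and supplies data, anything outside the catalog (a hypothetical `Script` component, say) is rejected before rendering rather than executed.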

The implications of this declarative, native-first approach are profound for both security and user experience across the AI industry. In the multi-agent mesh, an emerging architectural paradigm where complex tasks are orchestrated by several specialized AI agents collaborating remotely, an agent's ability to influence the end user's interface has traditionally been severely limited. For example, asking an AI agent to book a restaurant table typically results in a slow, error-prone text exchange, with the agent sequentially prompting the user for date, time, and party size. With A2UI, the same request can instead produce a complete, context-aware reservation form, pre-filled with the information provided so far and featuring a native date picker, time selector, and submit button. This shift from conversational turn-taking to graphical task completion in a single step dramatically improves usability and efficiency. Furthermore, because the A2UI model restricts the agent to a client-defined catalog of safe components, enterprises can deploy powerful generative agents in critical workflows with much less risk of UI injection vulnerabilities or cross-site scripting (XSS) attacks. This security-first philosophy is a key enabler for widespread adoption in enterprise applications and across different trust boundaries.[2][4][6][7][8]
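The reservation example can be sketched as an agent-side helper that describes the form as data and pre-fills whatever the conversation has already established. The function name `build_reservation_form`, the component types, and the field names are all hypothetical; they illustrate the idea and are not the A2UI schema.

```python
import json
from typing import Any, Optional


def build_reservation_form(
    date: Optional[str] = None,
    time: Optional[str] = None,
    party_size: Optional[int] = None,
) -> dict[str, Any]:
    """Describe a reservation form as data, pre-filling what is already known.

    Component types and field names are hypothetical, not the A2UI schema.
    """

    def field(component_id: str, component_type: str, label: str, value: Any) -> dict[str, Any]:
        props: dict[str, Any] = {"label": label}
        if value is not None:
            props["value"] = value  # pre-fill from the conversation so far
        return {"id": component_id, "type": component_type, "props": props}

    fields = [
        field("date", "DatePicker", "Date", date),
        field("time", "TimePicker", "Time", time),
        field("party", "NumberInput", "Party size", party_size),
    ]
    submit = {"id": "submit", "type": "Button", "props": {"label": "Reserve"}}
    form = {
        "id": "form",
        "type": "Card",
        "children": [f["id"] for f in fields] + ["submit"],
    }
    return {"root": "form", "components": [form, *fields, submit]}


# The user asked for a table for four on 26 December; the time is still unknown.
form_payload = build_reservation_form(date="2025-12-26", party_size=4)
print(json.dumps(form_payload, indent=2))
```

The user then fills in only the missing time in a native picker and submits once, instead of answering three separate prompts.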

Google's decision to make A2UI an open-source project signals an intent to establish a ubiquitous, platform-agnostic standard in the rapidly emerging agentic UI space. The standard is designed to be cross-platform, with initial renderers and integrations available for major frameworks including Flutter (via its GenUI SDK), Angular, and Web Components, underscoring its versatility across web, mobile, and desktop environments. This open approach places A2UI in a competitive landscape alongside other evolving protocols. Anthropic's Model Context Protocol (MCP) and related projects like MCP Apps have been influential, often taking a web-centric view where UI is treated as a sandboxed resource, typically prebuilt HTML. Similarly, OpenAI's Apps SDK and related tools are primarily optimized for its own ecosystem. A2UI differentiates itself by prioritizing deep, native integration and cross-platform portability. Because the protocol cleanly separates UI structure from implementation, the same JSON payload generated by a remote AI agent can be rendered correctly in a variety of front-end technologies, allowing developers to maintain their brand's identity and UX consistency regardless of the underlying agent or client platform. The early adoption by frameworks like CopilotKit further solidifies A2UI's role as a specialized protocol focused on the specific, complex challenge of interoperable, generative UI responses, complementing rather than replacing existing frameworks.[2][4][5][9][10]
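The separation of structure from implementation can be illustrated with one payload and two stand-in renderers. Both renderers below are toy assumptions (one emits HTML strings, one emits plain text for a terminal), as is the payload shape; real A2UI renderers for Flutter, Angular, or Web Components would map the same type names onto their own native widgets.

```python
import html
from typing import Any, Callable

# Hypothetical payload; the shape is illustrative, not the A2UI schema.
payload = {
    "root": "card",
    "components": [
        {"id": "card", "type": "Card", "children": ["msg", "ok"]},
        {"id": "msg", "type": "Text", "props": {"text": "Confirm booking?"}},
        {"id": "ok", "type": "Button", "props": {"label": "Confirm"}},
    ],
}

# A renderer maps each component type to a function of (props, rendered children).
Renderer = dict[str, Callable[[dict, list], str]]

# Two stand-in "platforms", each drawing the same type names its own way.
html_renderer: Renderer = {
    "Card": lambda props, kids: "<section>" + "".join(kids) + "</section>",
    "Text": lambda props, kids: f"<p>{html.escape(props['text'])}</p>",
    "Button": lambda props, kids: f"<button>{html.escape(props['label'])}</button>",
}
terminal_renderer: Renderer = {
    "Card": lambda props, kids: "\n".join(kids),
    "Text": lambda props, kids: props["text"],
    "Button": lambda props, kids: f"[ {props['label']} ]",
}


def render(payload: dict[str, Any], renderer: Renderer) -> str:
    """Walk the ID-referenced component list and draw it with one renderer."""
    by_id = {c["id"]: c for c in payload["components"]}

    def walk(component_id: str) -> str:
        comp = by_id[component_id]
        draw = renderer[comp["type"]]  # KeyError if this platform lacks the type
        kids = [walk(child_id) for child_id in comp.get("children", [])]
        return draw(comp.get("props", {}), kids)

    return walk(payload["root"])


print(render(payload, html_renderer))
print(render(payload, terminal_renderer))
```

The agent's output never changes between the two calls; only the client-side mapping from component type to widget does, which is what lets each host keep its own look and feel.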

The launch of A2UI represents more than a technical update; it is a foundational step toward the era of generative interfaces, where the user experience is not static but dynamically constructed by AI based on the context of the user's need. By standardizing the communication protocol for these agent-driven interfaces, Google is providing the essential scaffolding for developers to move from simple chat responses to rich, interactive applications that adapt in real time. This open standard is set to accelerate the development of sophisticated, task-specific AI agents that integrate into existing software, transforming user-agent interaction from a clunky, text-based conversation into a smooth, visually guided experience and ushering in a more capable generation of intelligent software.[2][8][11]

Sources

[3]

[5]

[6]

[7]

[10]

[11]