DeepMind's Genie 3 AI Creates Consistent, Interactive 3D Worlds for AGI

Google's Genie 3 generates interactive, consistent 3D worlds with dynamic events, accelerating the pursuit of artificial general intelligence.

August 5, 2025

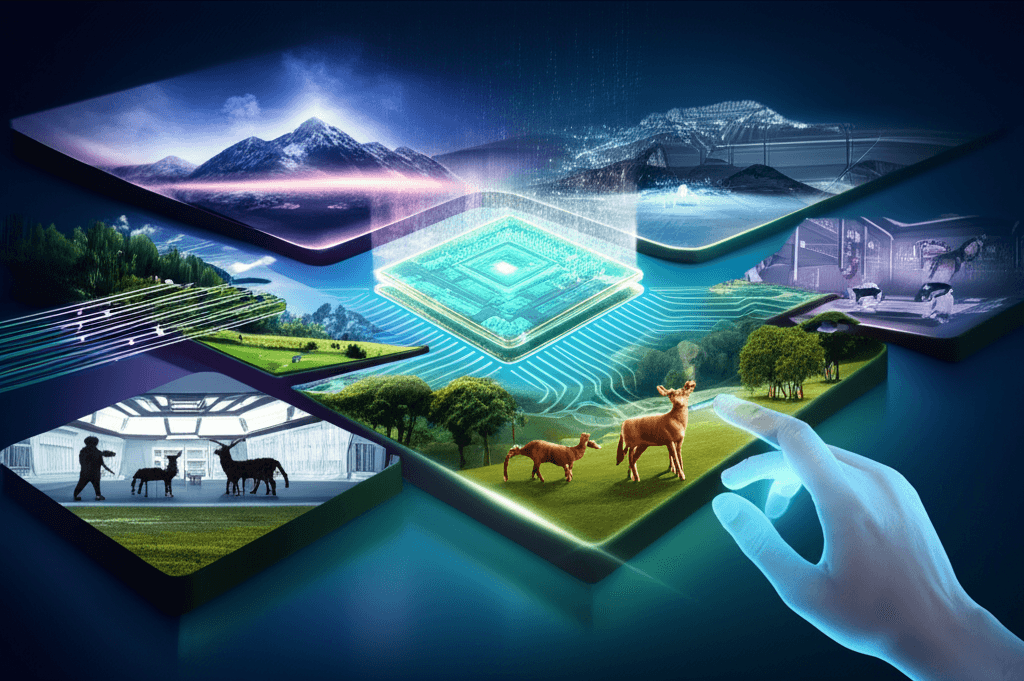

Google's AI research division, DeepMind, has unveiled Genie 3, a sophisticated world model capable of generating interactive, three-dimensional environments in real time from a simple text prompt. This development is being positioned as a significant advancement in the pursuit of artificial general intelligence (AGI), the hypothetical ability of an AI to understand or learn any intellectual task that a human being can. Genie 3 demonstrates a marked improvement over its predecessors and competitors by creating more consistent, detailed, and dynamic virtual worlds, which are crucial for training advanced AI agents. The model can generate these interactive environments at 720p resolution and a fluid 24 frames per second, allowing for several minutes of coherent exploration. This is a substantial leap from the 10 to 20 seconds of interaction offered by its forerunner, Genie 2.[1][2][3][4]

The core innovation of Genie 3 lies in its ability to create general-purpose worlds that are not restricted to specific, pre-defined environments.[1] This is a critical step towards building AI systems that can operate and solve problems amid the complexity of the real world. According to DeepMind, world models like Genie 3 are a key stepping stone on the path to AGI because they provide an unlimited curriculum of rich simulation environments for training AI agents.[5] The potential applications range from training robots and autonomous vehicles in realistic virtual settings to creating dynamic and responsive content for the entertainment and gaming industries.[6][7] By simulating a wide array of scenarios and challenges, these models can accelerate the development of more robust and adaptable AI systems without the risks and costs associated with real-world training.

A standout feature of Genie 3 is its emergent ability to maintain physical consistency over extended periods.[1] The model can remember the placement of objects and environmental details even when they are off-screen for up to a minute, a significant challenge for previous generative models.[2] This ability was not explicitly programmed into the model but arose from its training on vast amounts of video data.[1][7] Furthermore, Genie 3 introduces "promptable world events," allowing users to dynamically alter the simulation with new text commands after the initial world has been generated.[2][4] For example, a user exploring a generated mountain scene could introduce a herd of deer with a simple text prompt, and the model would integrate this new element in real time.[4] This transforms the generated environment from a static space into a responsive and editable one, opening up new possibilities for interactive experiences and complex simulations.

Genie 3's architecture is autoregressive, meaning each new frame is generated conditioned on what has already been generated, a design that contributes to its ability to produce consistent and evolving worlds.[2] It learns to simulate physical properties and interactions by observing patterns in video data, rather than relying on hard-coded physics engines.[1] Learning dynamics from data in this way is a departure from traditional simulation approaches and is a key factor in the model's ability to generate a diverse range of interactive environments. The work also places Google in direct competition with other major players in the AI field, such as Meta, which is developing its own world models for applications like robotics.[2] The underlying philosophy shared across the industry is that for an AI to act reliably in the physical world, it must first develop an accurate internal simulation of reality to "think" and plan within.[2]
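
To make the term "autoregressive" concrete, the following is a minimal toy sketch in Python, not a description of how Genie 3 is actually built. It assumes a hypothetical `toy_next_frame` function standing in for a learned model, and it reduces a "frame" to a single (x, y) position. The only point it illustrates is the loop structure: each new frame is produced from the history of earlier frames and user actions, then appended to that history before the next step.

```python
from typing import Callable, List, Tuple

Frame = Tuple[int, int]  # toy "frame": just an (x, y) position (assumption)
Action = str             # toy user input such as "left" or "up" (assumption)

# Hypothetical mapping from actions to position changes.
MOVES = {"left": (-1, 0), "right": (1, 0), "up": (0, 1), "down": (0, -1)}


def toy_next_frame(history: List[Frame], actions: List[Action]) -> Frame:
    """Hypothetical stand-in for a learned model.

    A real world model would predict pixels from the whole history;
    this toy only moves a point according to the most recent action.
    """
    x, y = history[-1]
    dx, dy = MOVES.get(actions[-1], (0, 0))  # unknown actions leave the frame unchanged
    return (x + dx, y + dy)


def rollout(
    first_frame: Frame,
    actions: List[Action],
    step: Callable[[List[Frame], List[Action]], Frame] = toy_next_frame,
) -> List[Frame]:
    """Autoregressive loop: every output is fed back in as input."""
    frames = [first_frame]
    taken: List[Action] = []
    for action in actions:
        taken.append(action)
        frames.append(step(frames, taken))  # condition on everything so far
    return frames


if __name__ == "__main__":
    print(rollout((0, 0), ["right", "right", "up"]))
```

Because the step function receives the full history rather than only the latest frame, a model in this style can in principle draw on earlier frames when generating later ones, which is the property the article associates with consistency over time.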

While Genie 3 represents a significant leap forward, DeepMind researchers acknowledge that there is still a long way to go in the field of world model development.[2] Currently, access to Genie 3 is limited to a select group of academic researchers and creative professionals to foster further research and explore its creative potential.[3] The feedback and discoveries from this initial group will be crucial in guiding the future development of this technology. The long-term vision is to create AI agents that can be trained in these rich, simulated worlds to perform a wide range of general-purpose tasks, bringing the industry closer to the ambitious goal of AGI.[1] The continued progress in models like Genie 3 signals a future where the line between the real and the simulated blurs, with profound implications for how we train AI and how we interact with digital content.