Context Engineering Emerges as AI's New Standard for LLM Control

From single queries to system design: "Context engineering" defines the new era of sophisticated AI application building.

June 28, 2025

A consensus is emerging among top minds in the artificial intelligence industry that the future of interacting with large language models (LLMs) lies not in the clever phrasing of a single query, but in the comprehensive construction of a rich informational environment. Shopify CEO Tobi Lütke and former OpenAI researcher Andrej Karpathy have both championed the term "context engineering" as a more accurate and powerful successor to "prompt engineering." This shift in terminology reflects a deeper, structural change in how developers are building sophisticated AI applications, moving beyond simple commands to designing entire systems that feed LLMs the necessary data to perform complex tasks reliably.

For the past few years, "prompt engineering" has been the popular term for the skill of crafting effective inputs for generative AI models.[1][2] It involves carefully choosing words, structuring questions, and providing examples to guide a model toward a desired output.[1] This approach, however, is often associated with the user-facing, single-turn interactions seen in public chatbots.[3][4] While valuable, this view of prompting is limited: it focuses on the art of the single question, which often proves insufficient for building robust, production-level AI systems that require consistency and accuracy. The term has also suffered from how it is commonly understood. As technologist Simon Willison has observed, many people take it to mean a "laughably pretentious term for typing things into a chatbot," a reading that has diluted its intended meaning.[5]

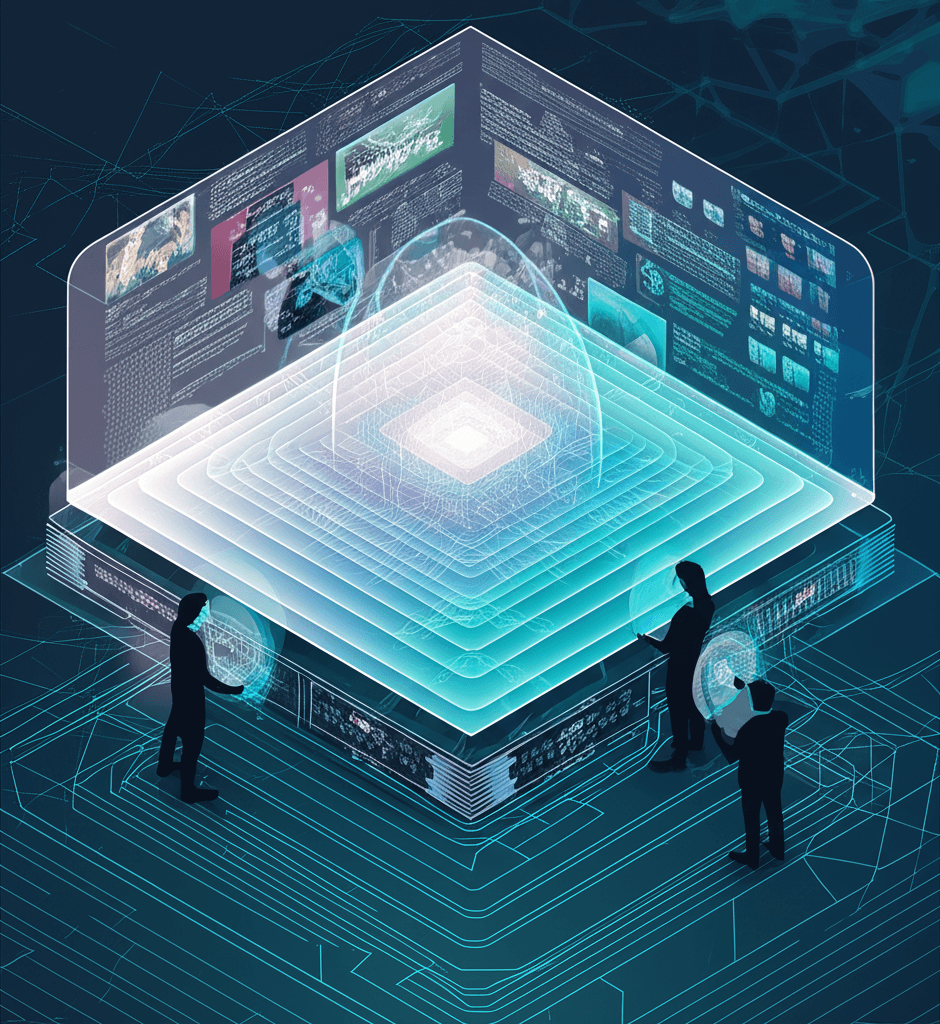

Context engineering, by contrast, is a developer-facing discipline focused on building the entire informational backdrop for an LLM.[3] As Lütke puts it, it is "the art of providing all the context for the task to be plausibly solvable by the LLM."[3] This goes far beyond a single, well-crafted sentence. It involves systematically designing and managing what enters the model's "context window," which Karpathy analogizes to a computer's RAM or working memory.[6] This process is a delicate blend of art and science, requiring engineers to pack this limited window with just the right information. This can include task descriptions, few-shot examples (a small number of demonstrations of the task), data from retrieval-augmented generation (RAG) systems, user history, tool specifications, and other relevant data, which can even be multimodal.[5][4] The goal is to create a dynamic system that curates, compresses, and sequences the right inputs at the right time, transforming the interaction from a simple query into a comprehensive briefing.[3]
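To make the "curate, compress, and sequence" idea concrete, here is a minimal Python sketch of a context assembler working against a fixed budget. It is an illustration under stated assumptions, not a method described by Lütke or Karpathy: the `ContextItem` type, the `build_context` function, the priority scheme, and the word-count stand-in for a real tokenizer are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    label: str     # e.g. "task", "few_shot", "retrieved", "history", "tools"
    text: str
    priority: int  # lower number = more important (hypothetical scheme)


def build_context(items: list[ContextItem], budget: int) -> str:
    """Assemble a context string that fits within `budget` words.

    Assumption: whitespace-separated words approximate tokens. A real
    system would count tokens with the model's own tokenizer.
    """
    chosen = []
    remaining = budget
    # Curate: consider the most important items first.
    for item in sorted(items, key=lambda i: i.priority):
        if remaining <= 0:
            break
        words = item.text.split()
        # Compress: naive truncation of whatever overflows the budget.
        if len(words) > remaining:
            words = words[:remaining]
        chosen.append((item, " ".join(words)))
        remaining -= len(words)
    # Sequence: restore the order in which the caller supplied the items.
    position = {id(item): idx for idx, item in enumerate(items)}
    chosen.sort(key=lambda pair: position[id(pair[0])])
    return "\n\n".join(f"[{item.label}]\n{text}" for item, text in chosen)


items = [
    ContextItem("task", "Summarize the ticket", priority=0),
    ContextItem("history", "a b c d e", priority=2),
    ContextItem("retrieved", "x y z", priority=1),
]
print(build_context(items, budget=5))
```

With a budget of five words, the task description is kept whole, the retrieved text is cut to its first two words, and the lowest-priority history is dropped. A production system would likely replace truncation with summarization or relevance ranking, but the shape of the problem is the same: a limited window and more candidate material than will fit.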

The implications of this shift from prompt to context engineering are significant for the AI industry. It signals a maturation from building "ChatGPT wrappers" to developing complete, intelligent systems.[4] As Karpathy explains, context engineering is just one piece of a much larger puzzle that includes breaking down problems into manageable steps, dispatching tasks to the most appropriate LLM, and managing complex control flows.[4] This requires a more sophisticated skill set from AI engineers, moving beyond the clever manipulation of natural language to include system design, data architecture, and an intuitive understanding of a model's "psychology."[5][3] For businesses, this means investing in teams that can build these complex, context-rich applications. It’s no longer enough to have employees who are good at writing prompts; companies need engineers who can construct the entire scaffolding that makes an AI's performance reliable and scalable.[7][8]
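The broader system work mentioned above (splitting a problem into steps and dispatching each step to a suitable model) can be sketched in a few lines of Python. This is only a toy illustration: `small_model`, `large_model`, the `ROUTES` table, and `run_pipeline` are hypothetical stand-ins, and the "models" simply echo their prompts rather than calling any real LLM API.

```python
from typing import Callable


# Hypothetical stand-ins for model calls; a real system would call an LLM API here.
def small_model(prompt: str) -> str:
    return f"small:{prompt}"


def large_model(prompt: str) -> str:
    return f"large:{prompt}"


# Assumed routing table: cheap model for simple steps, larger model for harder ones.
ROUTES: dict[str, Callable[[str], str]] = {
    "classify": small_model,
    "draft": large_model,
}


def run_pipeline(steps: list[tuple[str, str]]) -> list[str]:
    """Run (kind, instruction) steps in order, passing each output to the next step."""
    outputs = []
    previous = ""
    for kind, instruction in steps:
        # Unknown step kinds fall back to the larger model.
        model = ROUTES.get(kind, large_model)
        prompt = f"{instruction}\n{previous}".strip()
        previous = model(prompt)
        outputs.append(previous)
    return outputs


results = run_pipeline([
    ("classify", "Label the ticket"),
    ("draft", "Write a reply"),
])
print(results[-1])
```

The point of the sketch is that the control flow lives in ordinary code, while each model call receives only the context assembled for its step.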

Ultimately, the rise of context engineering is a direct response to the increasing complexity and capability of large language models. While a simple prompt might be enough to generate a creative poem, solving a multi-step business problem requires a foundation of well-structured information. The focus is shifting from the magic of the model to the engineering of the environment it operates in. As these models become more integrated into critical applications, the ability to reliably guide their reasoning through meticulously engineered context will be the defining characteristic of successful AI implementation. The debate isn't just about semantics; it represents a fundamental evolution in how we build with and understand artificial intelligence, moving from asking a model questions to engineering the information it needs to solve problems.[4][9]