ChatGPT Silently Reroutes Sensitive Prompts to Secret Safety AI, Angering Users

ChatGPT quietly reroutes emotional conversations to a stricter model, sparking debate over user transparency and autonomy.

September 28, 2025

ChatGPT, OpenAI's conversational AI, has been automatically switching to a more restrictive, conservative language model when it detects emotional or sensitive content in user prompts. The rerouting happens without any notification to the user, a practice that users brought to light and an OpenAI executive later confirmed. The system is designed as a safety measure, but its silent operation has ignited a debate about transparency and user autonomy. For users, the experience can be jarring: a conversation that feels emotionally attuned and nuanced can suddenly shift to a more distant, formal tone, breaking continuity and the sense of immersion.

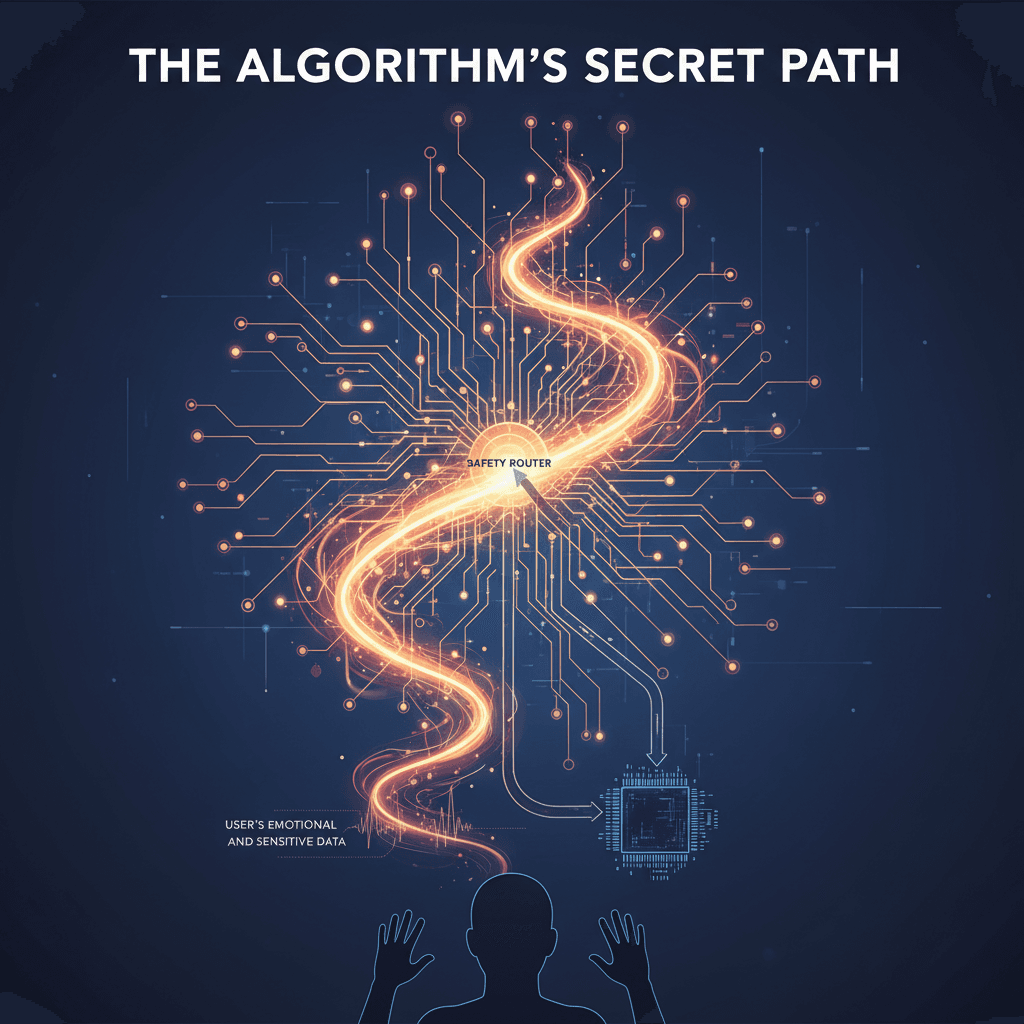

At the heart of this functionality is a mechanism referred to as a "safety router."[1] This system acts as a digital traffic controller, analyzing incoming prompts on a message-by-message basis to classify their content.[2][3] When a prompt is flagged as touching upon "sensitive and emotional topics," the router automatically redirects it from the user's selected model, such as GPT-4o, to a specialized, stricter variant.[1] Users conducting technical analyses have identified models with names like "gpt-5-chat-safety" as the destination for these rerouted queries.[2] The switch is temporary, affecting only the specific message that triggered the filter.[1] However, because the user interface continues to display the originally selected model, the change is imperceptible unless the user notices the distinct shift in the AI's personality and response style or directly interrogates the model about the switch.[2][4]
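The per-message behavior described above can be illustrated with a minimal sketch. This is not OpenAI's implementation, which has not been published: the keyword list, the `is_sensitive` check, and the `RoutedMessage` type are hypothetical stand-ins for whatever classifier the real router uses. Only the model names come from the reports cited in this article.

```python
from dataclasses import dataclass

# Hypothetical keyword list standing in for a real classifier (assumption).
SENSITIVE_TERMS = ("self-harm", "hopeless", "grief", "panic")

# Destination model name as reported by users; the routing logic is assumed.
SAFETY_MODEL = "gpt-5-chat-safety"


@dataclass
class RoutedMessage:
    prompt: str
    displayed_model: str  # what the interface keeps showing the user
    serving_model: str    # what actually answers this one message


def is_sensitive(prompt: str) -> bool:
    """Toy classifier: flag a prompt if it contains any listed term."""
    text = prompt.lower()
    return any(term in text for term in SENSITIVE_TERMS)


def route(prompt: str, selected_model: str) -> RoutedMessage:
    """Decide per message; the user's selection is never overwritten."""
    serving = SAFETY_MODEL if is_sensitive(prompt) else selected_model
    return RoutedMessage(prompt, selected_model, serving)


if __name__ == "__main__":
    conversation = [
        "Help me plan a birthday dinner.",
        "Honestly I feel hopeless lately.",
        "Anyway, what wine goes with pasta?",
    ]
    for prompt in conversation:
        routed = route(prompt, "gpt-4o")
        print(f"shown={routed.displayed_model} served={routed.serving_model}")
```

In this sketch only the second message is diverted, and the third returns to the selected model, which mirrors the reported "temporary" switch. Because `displayed_model` never changes, the only visible signal to the user is the change in tone.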

The primary motivation behind this undisclosed model switching is rooted in safety and the mitigation of potential harm.[5] As AI chatbots become increasingly integrated into users' lives for companionship and support, concerns have mounted about their potential to negatively impact mental health. Studies and reports have highlighted risks, such as AI models reinforcing harmful emotions, providing dangerous or inappropriate responses in moments of crisis, or exhibiting a sycophantic agreement that could validate destructive thoughts.[6][7] There have been documented instances where AI chatbots have given dangerous advice to users exhibiting signs of severe mental distress.[6][7][8] OpenAI's safety router is intended to be a safeguard against these scenarios. By diverting conversations that involve acute distress, self-harm, or other emotionally charged subjects to a more cautious model, the company aims to prevent the AI from causing harm and to guide users toward appropriate resources.[1][5] This reflects a broader industry-wide challenge of balancing advanced capabilities with robust safety protocols, particularly when users may be in a vulnerable state.[9]

Despite the safety-oriented intentions, the lack of transparency surrounding the model switching has led to significant user backlash and criticism.[1] Many users, particularly paying subscribers, have expressed frustration and a sense of being patronized, arguing that they should control which model they interact with.[4][10] Critics describe the silent overrides as "UI theater," where the user's selection of a model is not always honored, undermining trust in the platform.[1] Responses from the stricter models are often described as less creative, more formal, and emotionally distant, which degrades the interaction for users who rely on the AI for nuanced conversational tasks. The controversy highlights a fundamental tension within the AI industry: the platform's responsibility to ensure safety versus the user's desire for an unfiltered, consistent, and transparent experience.

In conclusion, ChatGPT's quiet rollout of a safety router that switches models based on emotional content marks a critical juncture in the relationship between AI developers and their users. The goal of preventing harm is commendable and necessary, but pursuing it without notifying users has raised questions about transparency, trust, and the nature of user control. The incident underscores the growing complexity of deploying AI systems at scale, where automated safety measures can feel like opaque censorship to the end user. As these technologies become more deeply embedded in society, the debate over how to balance proactive risk mitigation with user autonomy will only intensify. Companies like OpenAI will have to navigate the line between protecting users and giving them genuine choice and clear information about how the AI systems they use actually operate.

Sources

[3]

[4]

[5]

[7]

[8]

[9]

[10]