AMD launches full-stack AI arsenal, challenging rivals from cloud to PC

AMD cements its "AI Everywhere" strategy, launching MI400 data center GPUs and 60 TOPS Ryzen AI processors.

January 6, 2026

The semiconductor landscape continues to be redefined by artificial intelligence, a trend underscored by chipmaker AMD’s sweeping announcements at the Consumer Electronics Show, CES 2026. The company’s presentation laid out its "AI Everywhere" strategy in full: a fresh wave of client processors for the growing AI PC market, alongside the next generation of high-performance AI accelerators built for the world’s most demanding data centers. Together, these launches signal AMD's intent to solidify its position as a full-stack AI compute provider, challenging competitors from the cloud to the consumer device.

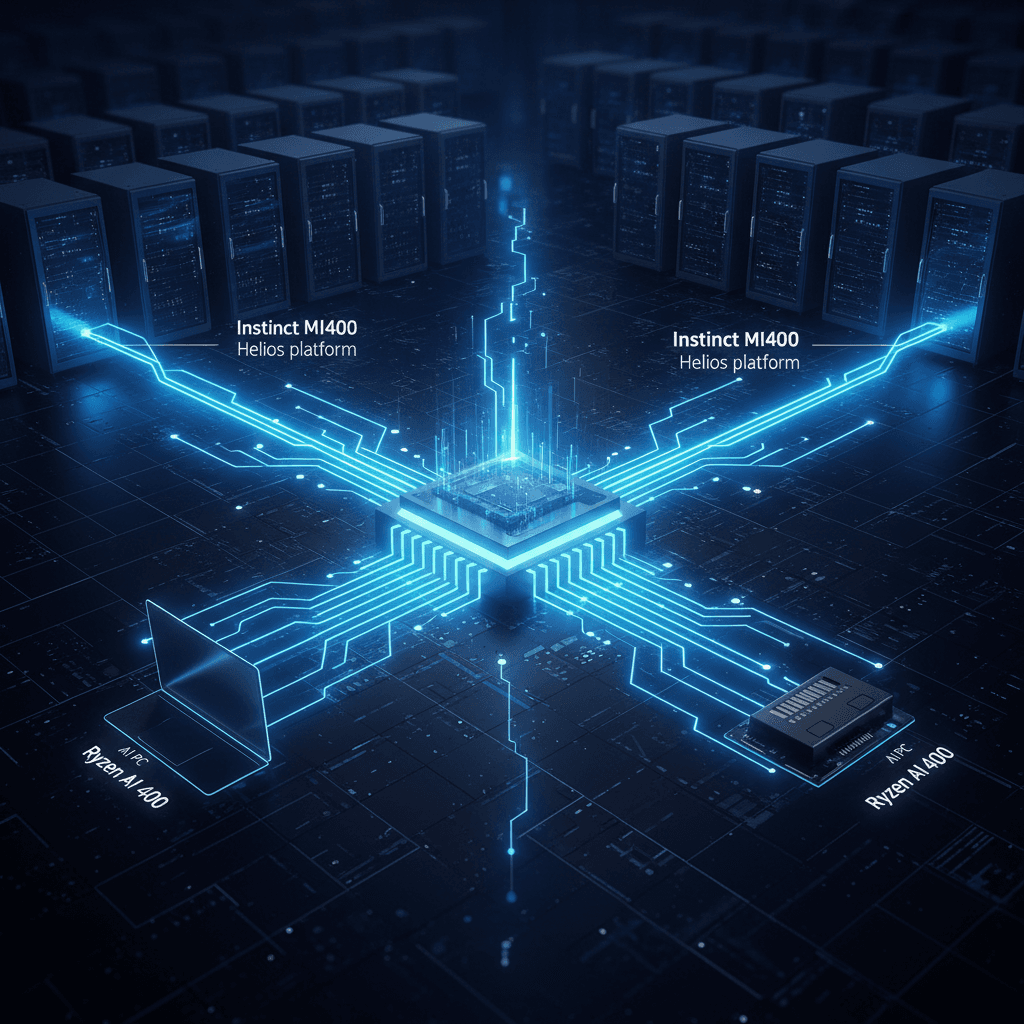

AMD’s commitment to the high-stakes data center market was on full display with the unveiling of the complete Instinct MI400 Series accelerator portfolio and a detailed look at its "Helios" rack-scale platform, a blueprint for future yotta-scale AI infrastructure[1][2][3]. The MI400 family, which includes the MI430X, MI440X, and MI455X, responds directly to escalating demand for compute for large language model (LLM) training and high-volume inference workloads[3][4]. The cornerstone of this enterprise push is the Instinct MI440X GPU, engineered specifically for on-premises AI deployments so that enterprises handling regulated data or latency-sensitive inference can keep their AI workloads local[2]. This on-premises focus is a strategic answer to the pragmatic concerns of IT leaders, who are growing more cautious about the high price of current AI hardware and its shorter depreciation cycles[2].

The ultimate expression of the company's data center ambition is the Helios rack-scale platform, which pairs the new MI455X accelerators with AMD EPYC “Venice” CPUs and Pensando “Vulcano” AI NICs for scale-out networking[2][3]. A single Helios rack is designed to deliver up to 2.9 exaFLOPS of FP4 compute for AI inference, and its modular, open-standard design can scale to connect thousands of accelerators[1][3]. The platform builds on AMD's open ROCm software ecosystem, which the company touts as an increasingly viable alternative to proprietary platforms, aiming to attract developers and customers looking for a reliable second source to the market’s current dominant player[2]. Looking further ahead, AMD also previewed its next-generation Instinct MI500 series GPUs, slated for a 2027 launch. Built on the CDNA 6 architecture and a 2-nanometer manufacturing process, the MI500 series is projected to offer up to a 1,000x increase in AI performance over the earlier MI300X generation[2][5]. This roadmap signals AMD's sustained commitment to closing the performance gap in the high-end training market[6][7].

On the consumer front, AMD launched the Ryzen AI 400 Series and Ryzen AI PRO 400 Series processors, aimed primarily at laptops and reinforcing the company's position in the rapidly expanding "AI PC" category[8][9]. The new lineup, a mid-cycle refresh, is designed to make AI capability a standard feature across the product stack rather than a premium add-on[10][11]. The flagship Ryzen AI 9 HX 475 combines 12 Zen 5 CPU cores, RDNA 3.5 graphics, and a neural processing unit (NPU) built on the XDNA 2 architecture that delivers up to 60 trillion operations per second (TOPS)[12][8][13]. That figure comfortably exceeds the 40 TOPS NPU threshold Microsoft sets for Copilot+ PCs, so laptops powered by these chips should be able to handle increasingly complex on-device AI workloads[8][9]. AMD claims significant performance gains for the new chips, citing up to 1.3 times faster multitasking and 1.7 times faster content creation compared with previous generations or rival processors[8][13]. Systems from major original equipment manufacturers (OEMs), including Acer, ASUS, Dell, HP, and Lenovo, are expected to ship with Ryzen AI 400 Series processors beginning in the first quarter of 2026[8][14].

Beyond the mainstream consumer chips, AMD also introduced specialized additions to its portfolio aimed at developers and edge computing[11]. The Ryzen AI Max+ 392 and 388 processors target professional creators and AI developers with a GPU-heavy configuration of up to 40 RDNA 3.5 GPU compute units and support for up to 128GB of unified memory[15][13][14]. This configuration is engineered for local development with large models and for intensive content creation, and AMD claims up to 1.4 times better AI performance than a comparable rival's top-tier chip[14]. Extending its reach into the industrial and automotive sectors, AMD unveiled the Ryzen AI Embedded processor portfolio, including the P100 and X100 Series[11][1]. These embedded processors integrate the same Zen 5 CPU cores, RDNA 3.5 graphics, and XDNA 2 NPUs on a single chip designed for robust edge deployments such as autonomous systems, digital cockpits, and industrial automation[11][9]. This unified approach lets AI models developed on the embedded platform be ported to the company's Versal AI Edge platforms, creating a scalable development path from cloud to client to edge[11].

In sum, the CES 2026 announcements demonstrate a cohesive, multi-front strategy by AMD. The company is simultaneously pushing the boundaries of large-scale AI infrastructure with the Instinct MI400 series and the Helios platform, while aggressively standardizing AI capabilities on consumer devices with the Ryzen AI 400 series. This dual-pronged effort positions AMD not merely as a component supplier but as a foundational compute provider for the entire AI lifecycle, aiming to capture market share from the most powerful cloud data centers down to the smallest embedded industrial systems[1]. The success of this strategy hinges on the real-world performance validation of the new client chips and the continued maturation and adoption of the open ROCm software ecosystem in the enterprise space[2].

Sources

[1]

[6]

[8]

[9]

[10]

[11]

[13]

[14]

[15]