Altman Claims ChatGPT Sips Water, Challenges AI's Thirsty Image

Altman offers a tiny estimate for ChatGPT's water use, fueling skepticism amidst growing concerns over AI's substantial global footprint.

June 11, 2025

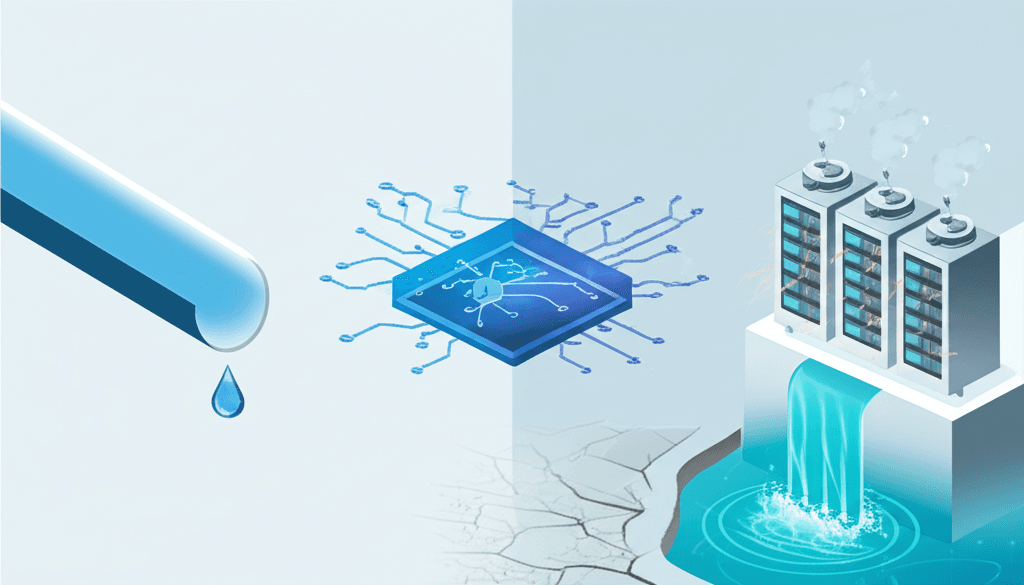

The burgeoning field of artificial intelligence is facing increasing scrutiny over its environmental footprint, particularly its substantial water consumption for cooling the powerful data centers that underpin these technologies.[1][2][3][4] Amidst this growing concern, OpenAI CEO Sam Altman recently offered a specific, and notably small, estimate for the water usage of its flagship product, ChatGPT. According to Altman, an average ChatGPT query consumes approximately 0.000085 gallons of water, which he contextualized as "roughly one fifteenth of a teaspoon."[5][6][7][8][9] This figure, shared in a blog post, presents a stark contrast to some independent analyses and highlights the complexities in quantifying the true environmental cost of large language models.[5][7]
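As a quick sanity check on the two numbers quoted above, the gallon figure can be converted to milliliters and compared with a teaspoon. This is only a sketch: it assumes US customary units (US liquid gallon and US teaspoon), which Altman's post is not confirmed to specify, and the function and variable names are illustrative.

```python
# Unit check for the quoted per-query figure.
# Assumption: US customary units (US liquid gallon, US teaspoon).
ML_PER_US_GALLON = 3785.411784
ML_PER_US_TEASPOON = 4.92892159375


def gallons_to_ml(gallons: float) -> float:
    """Convert US liquid gallons to milliliters."""
    return gallons * ML_PER_US_GALLON


per_query_ml = gallons_to_ml(0.000085)                    # figure quoted by Altman
queries_per_teaspoon = ML_PER_US_TEASPOON / per_query_ml  # queries needed to use one teaspoon

print(f"{per_query_ml:.3f} mL per query")
print(f"about 1/{queries_per_teaspoon:.1f} of a teaspoon")
```

Under these assumptions the two statements agree with each other: 0.000085 gallons is roughly a third of a milliliter, or a little under one fifteenth of a teaspoon.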

Altman's statement positions ChatGPT's water usage as remarkably efficient, especially when compared to broader estimates of AI's thirst.[5][6][7] If accurate, this low per-query consumption could alleviate some concerns about the scalability of AI in a world facing increasing water scarcity.[10][11] However, the claim has been met with both interest and a degree of skepticism, as it is significantly lower than some previous projections for AI water usage.[12][13][14][15][16] Altman's estimate is reportedly about one-tenth of what some analysts had projected, underscoring the ongoing debate and the need for greater transparency in how AI companies calculate and report such figures.[17][18] The lack of a specified source or methodology behind Altman's number in his blog post further complicates independent verification.[5][7]

The core reason for AI's significant water footprint lies in the immense computational power required to train and run large language models.[1][18][2] This power generates substantial heat within data centers, necessitating extensive cooling systems to maintain optimal operating temperatures and prevent hardware failure.[1][12][2][3] These cooling processes often rely on water, either through evaporative cooling towers that release water into the atmosphere, or through chilled water circuits.[17][11][3] Beyond this direct (Scope 1) water use for cooling servers, there is also indirect (Scope 2) water consumption associated with the electricity generation needed to power these energy-hungry facilities.[17][11] The manufacturing of the specialized hardware, such as GPUs, also carries an "embodied water" footprint (Scope 3).[17][3] Tech giants like Google and Microsoft have reported significant increases in their overall water consumption, partly driven by the growth in AI.[17][19][12][2][14] For instance, Microsoft's water consumption jumped 34% from 2021 to 2022, while Google saw a 20% increase in the same period, figures attributed in part to AI development.[17][12][2][14] Globally, AI's annual water withdrawal is projected to reach between 4.2 and 6.6 billion cubic meters by 2027.[17][10][11]

Various researchers have attempted to quantify the water footprint of AI interactions. Some estimates have suggested that a series of 5 to 50 prompts with ChatGPT could consume around 500 milliliters (about 17 fluid ounces) of water, depending on the server location and other factors.[17][13][18][14][20][15][16] Other analyses, such as one by researchers from the University of California, Riverside and the University of Texas at Arlington, indicated that a 100-word GPT-4 response might consume 519 milliliters of water.[21] These figures encompass both direct cooling water and the water used in electricity generation. One study suggested that training GPT-3 alone might have consumed 700,000 liters of freshwater.[22][14][16] Another perspective refines this, suggesting that if only the direct water for cooling within the data center is counted (excluding the water used in electricity generation), the figure is closer to 500 milliliters per 300 queries.[23] These varying estimates underscore the challenges in establishing a standardized measure for AI's water footprint, which can be influenced by data center efficiency, cooling technologies, ambient climate conditions, and the energy mix of the local grid.[17][24]
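To see how far apart these estimates sit, it helps to put them on a common per-query basis. The sketch below does only that arithmetic, using the figures quoted in this article; the labels and variable names are illustrative, and the US gallon conversion is an assumption. The estimates do not share the same boundaries (some include water used for electricity generation, one counts cooling only), so the ratios are a rough guide rather than a like-for-like comparison.

```python
# Normalize the quoted estimates to milliliters per query.
# Assumption: Altman's figure is in US liquid gallons.
ML_PER_US_GALLON = 3785.411784

ALTMAN = "Altman, 0.000085 gal per query"

estimates_ml_per_query = {
    "500 mL per 50 prompts": 500 / 50,
    "500 mL per 5 prompts": 500 / 5,
    "500 mL per 300 queries (cooling only)": 500 / 300,
    ALTMAN: 0.000085 * ML_PER_US_GALLON,
}

altman_ml = estimates_ml_per_query[ALTMAN]

# How many times larger each estimate is than Altman's figure.
ratios = {label: ml / altman_ml for label, ml in estimates_ml_per_query.items()}

for label, ml in estimates_ml_per_query.items():
    print(f"{label}: {ml:.2f} mL per query, {ratios[label]:.1f}x Altman's figure")
```

On this arithmetic, the cooling-only estimate is about five times Altman's number, while the 5-to-50-prompt range works out to somewhere between roughly 30 and 300 times larger.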

The implications of AI's water consumption are significant, particularly as the technology becomes more pervasive and as concerns about global water scarcity and climate change intensify.[10][11][13][2][3] Data centers are already substantial water consumers, with large hyperscale facilities potentially using millions of liters per day.[11][25] In some water-stressed regions, the establishment or expansion of data centers has sparked local concerns about competition for freshwater resources.[2][20][26][3] The AI industry is aware of these challenges. Companies like Microsoft and Google have committed to becoming "water positive" by 2030, meaning they aim to replenish more water than they consume through various conservation and restoration projects.[10][27] Microsoft has also announced new data center designs that aim for zero water evaporation for cooling by using closed-loop systems and chip-level cooling, which could save an estimated 125 million liters of water annually per facility.[27][25][28][29][30] These technological advancements, alongside efforts to improve Water Usage Effectiveness (WUE), a metric of water used per unit of IT energy, represent steps toward mitigating AI's water impact.[25][28][29]
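For readers unfamiliar with WUE, the metric is commonly expressed as liters of water used per kilowatt-hour of IT equipment energy. The snippet below is a minimal sketch of that ratio; the function name and the input numbers are hypothetical and do not describe any real facility.

```python
def water_usage_effectiveness(water_liters: float, it_energy_kwh: float) -> float:
    """Return WUE in liters per kWh of IT equipment energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return water_liters / it_energy_kwh


# Hypothetical inputs, chosen only to illustrate the calculation.
example_water_liters = 1_800_000
example_it_energy_kwh = 1_000_000

example_wue = water_usage_effectiveness(example_water_liters, example_it_energy_kwh)
print(f"WUE: {example_wue:.2f} L/kWh")
```

A lower value means less water is used for the same amount of computing energy, which is why designs that avoid evaporative cooling push the metric toward zero.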

Sam Altman's specific claim of one-fifteenth of a teaspoon per ChatGPT query, if substantiated and representative of typical, real-world usage across diverse conditions, would suggest significant strides in efficiency by OpenAI. However, without transparent, verifiable data and standardized reporting across the industry, it remains difficult to fully assess such claims against the broader environmental impact.[18] The discrepancy between Altman's figure and other academic and analytical estimates highlights a critical need for more open and detailed accounting of resource consumption by AI models. As AI continues its rapid development and deployment, understanding and managing its environmental footprint, including its thirst for water, will be crucial for ensuring its sustainable integration into society. The push for greater transparency in reporting AI's water usage, similar to what is becoming more common for carbon footprints, is growing.[17][24][18][26] This would allow for more informed decision-making by developers, policymakers, and consumers alike, and help drive innovation towards truly resource-efficient artificial intelligence.

Sources

[1]

[2]

[3]

[4]

[5]

[6]

[10]

[11]

[13]

[14]

[15]

[16]

[17]

[19]

[22]

[23]

[24]

[27]

[28]

[29]

[30]