Alibaba's Qwen AI Turns Flat Images into Editable Photoshop Layers Automatically

Alibaba's AI decomposes flat images into structured, Photoshop-like RGBA layer stacks, promising inherent editability and recursive, layer-by-layer control.

December 21, 2025

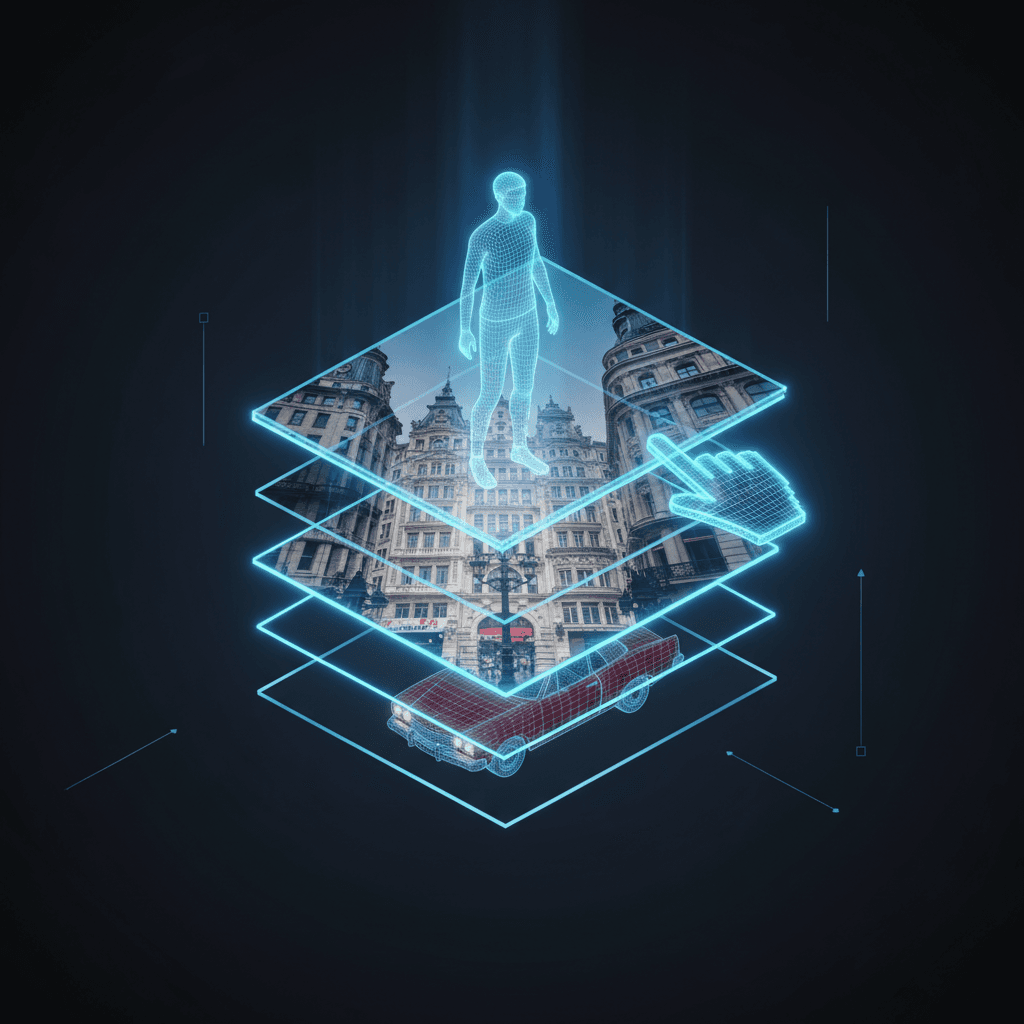

With the release of Qwen-Image-Layered, Alibaba's Qwen AI unit moves image generation and editing beyond simple manipulation toward automatically creating structured, multi-layer compositions akin to a project file in professional software like Adobe Photoshop. The generative model is engineered to decompose a single flat image into multiple independent RGBA layers, each with its own transparent background, giving users a high degree of automated control and "inherent editability" over visual content. By isolating semantic components (such as a foreground subject, the background, or text) on their own distinct layers, the model changes the workflow for graphic designers, digital artists, and content creators, and promises to eliminate hours of tedious manual masking and segmentation work.[1][2]

The core innovation of Qwen-Image-Layered is that it turns the semantic components of an image into concrete, independently manipulable layers. Unlike traditional segmentation tools, which only generate masks or outlines for objects, this diffusion model generates a complete layer stack in which each layer contains both color information (RGB) and an alpha channel for transparency (A)[3][1]. With this layered representation, any subsequent editing operation, such as resizing an object, repositioning an element, or changing its color, is applied exclusively to the target layer, so the rest of the image stays consistent and free of unintended side effects[2]. Crucially, the model is also designed to handle occlusion: it fills in the parts of the background that were previously hidden by a foreground object, so that removing or moving the object does not leave a hole[1][2].
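
To make the layer-stack idea concrete, the following minimal sketch flattens RGBA layers with the standard Porter-Duff "over" operator and then moves the subject by editing only its own layer. It is not the model's code: the tiny 1x3 "image", the helper names (`over`, `composite`, `shift_right`), and the assumption that the background layer has already been filled in behind the subject are all illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float, float]  # (r, g, b, a), each in [0.0, 1.0]
Layer = List[List[Pixel]]                  # rows of pixels

CLEAR: Pixel = (0.0, 0.0, 0.0, 0.0)


def over(top: Pixel, bottom: Pixel) -> Pixel:
    """Porter-Duff 'over' for straight (non-premultiplied) alpha."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return CLEAR

    def blend(t: float, b: float) -> float:
        return (t * ta + b * ba * (1.0 - ta)) / out_a

    return (blend(tr, br), blend(tg, bg), blend(tb, bb), out_a)


def composite(layers: List[Layer]) -> Layer:
    """Flatten a layer stack that is ordered bottom-to-top."""
    height, width = len(layers[0]), len(layers[0][0])
    result: Layer = [[CLEAR] * width for _ in range(height)]
    for layer in layers:
        result = [
            [over(layer[y][x], result[y][x]) for x in range(width)]
            for y in range(height)
        ]
    return result


def shift_right(layer: Layer, dx: int) -> Layer:
    """Move a layer's content dx pixels right; vacated pixels become transparent."""
    width = len(layer[0])
    return [
        [row[x - dx] if 0 <= x - dx < width else CLEAR for x in range(width)]
        for row in layer
    ]


# Hypothetical decomposition result for a 1x3 image (assumed, not model output):
BLUE: Pixel = (0.0, 0.0, 1.0, 1.0)
RED: Pixel = (1.0, 0.0, 0.0, 1.0)
background: Layer = [[BLUE, BLUE, BLUE]]  # occluded pixel already filled in
subject: Layer = [[RED, CLEAR, CLEAR]]    # opaque only where the subject is

flat = composite([background, subject])                   # the original flat image
moved = composite([background, shift_right(subject, 2)])  # edit touches one layer
```

Here `flat` is `[[RED, BLUE, BLUE]]` and `moved` is `[[BLUE, BLUE, RED]]`: the subject changes position, the `background` layer is never modified, and no hole appears where the subject used to be because that pixel was already present in the background layer.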

A notable technical feature is the model's support for flexible and iterative decomposition, which distinguishes it from fixed-layer systems. Qwen-Image-Layered does not limit the user to a set number of layers; an image can be decomposed into as few as three or as many as eight or more layers, with the count adjusting to the scene's complexity and the user's requirements[3][2]. Furthermore, the model allows recursive decomposition: any generated layer can be selected and decomposed again into its own sub-layers, which in principle allows unlimited levels of refinement and granular control over complex scenes[1][2]. This capability supports advanced, non-destructive editing workflows that were previously possible only through expert manual effort in vector or raster editing software. For instance, a user can decompose a complex scene into its main elements, then take a single complex element, such as a crowded bookshelf, and recursively decompose it to edit individual books or objects on that shelf.[1][2]
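
The recursive workflow described above can be pictured as a layer tree. The sketch below is only an illustration of that structure: `LayerNode`, `decompose_recursively`, and the lookup table `FAKE_MODEL` are hypothetical names, and the table stands in for a real call to the model, which would operate on images rather than on string labels.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class LayerNode:
    """One layer in the tree; children are the sub-layers it was split into."""
    name: str
    children: List["LayerNode"] = field(default_factory=list)

    def leaves(self) -> List[str]:
        """Names of the finest-grained layers, in stacking order."""
        if not self.children:
            return [self.name]
        names: List[str] = []
        for child in self.children:
            names.extend(child.leaves())
        return names


def decompose_recursively(
    node: LayerNode,
    decompose: Callable[[str], List[str]],
    max_depth: int,
) -> LayerNode:
    """Split a layer, then split each resulting sub-layer, up to max_depth levels."""
    if max_depth > 0:
        for part in decompose(node.name):
            child = LayerNode(part)
            node.children.append(
                decompose_recursively(child, decompose, max_depth - 1)
            )
    return node


# Stand-in for the model (assumption): maps a layer to the sub-layers it yields.
FAKE_MODEL: Dict[str, List[str]] = {
    "scene": ["background", "bookshelf", "person"],
    "bookshelf": ["shelf frame", "red book", "blue book"],
}


def fake_decompose(name: str) -> List[str]:
    return FAKE_MODEL.get(name, [])


one_pass = decompose_recursively(LayerNode("scene"), fake_decompose, max_depth=1)
two_pass = decompose_recursively(LayerNode("scene"), fake_decompose, max_depth=2)
```

With one pass, `one_pass.leaves()` is `["background", "bookshelf", "person"]`; with two, the bookshelf is itself split and `two_pass.leaves()` is `["background", "shelf frame", "red book", "blue book", "person"]`. In practice the user would choose which layer to decompose further; the depth limit here simply keeps the sketch finite.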

Qwen-Image-Layered reinforces Alibaba's strategy of positioning itself as a leader in open-source generative AI, particularly in the multimodal domain. The model is released under the permissive Apache 2.0 license and is available on platforms like Hugging Face and ModelScope, which makes high-fidelity, layer-based editing accessible to a wider community of developers and users without the cost of proprietary professional software[1][4][5]. This move directly challenges established software giants like Adobe, whose dominance in creative tools is built on the very concept of layer-based editing. The model extends the previously released Qwen-Image-Edit, which itself builds on the 20-billion-parameter Qwen-Image foundation model, known for its text rendering and precise editing capabilities[6][7]. Because the layered output can be passed to an existing editing model, for example by using Qwen-Image-Edit to replace or modify the content of a specific layer, the two can be combined into an end-to-end AI-powered editing pipeline.[4][7]

The technology has both immediate and long-term implications for the creative and AI industries. For creators, it promises to drastically speed up common workflows such as background replacement, object manipulation, and compositing by automating the most time-consuming steps, selection and masking[1]. For the broader AI industry, Qwen-Image-Layered signals a step in the evolution of image models beyond text-to-image synthesis toward editable, structured output[5]. This mirrors the evolution of large language models, where the ability to edit and refine generated code or text is highly valued. By bringing a similar level of editability to visual media, the model raises expectations for state-of-the-art diffusion models[5]. It suggests a future in which AI does not just generate static images but produces pliable visual assets, further blurring the line between AI-generated content and traditionally designed professional graphics and establishing structured editability as a key metric for next-generation generative AI tools.[2][5]