AI Gets Self-Healing Brain: Researchers Fix Flaw Causing Digital Aphasia

Diagnosing AI's "Conduction Aphasia," the UniCorn framework allows models to self-heal and master accurate content generation.

January 11, 2026

A new study from Chinese researchers has shed light on a debilitating flaw in modern unified multimodal artificial intelligence models, formalizing the observed deficiency as "Conduction Aphasia" and introducing a novel solution for self-healing. This condition, which the researchers identify as a disconnect between a model's understanding and its ability to generate high-fidelity content, highlights a fundamental bottleneck in the architecture of systems that process both language and imagery. The proposed framework, named UniCorn and introduced under the subtitle "Towards Self-Improving Unified Multimodal Models through Self-Generated Supervision," represents a significant step forward by teaching AI systems to autonomously recognize and rectify their own weaknesses, eliminating the need for extensive external data or teacher supervision[1][2][3].

The concept of "Conduction Aphasia" in AI is a deliberate parallel to the human neurological condition in which an individual maintains strong language comprehension and speech motor skills but struggles to repeat words accurately or to translate internal thoughts into coherent speech. In unified multimodal models (UMMs), systems designed to handle both cross-modal comprehension and text-to-image synthesis, the phenomenon manifests as a failure to translate superior internal knowledge into faithful and controllable generative outputs[2][3]. Models afflicted by this digital aphasia can clearly interpret and critique a visual scene or a complex text prompt, yet they often synthesize an image that gets a key relational or fine-grained detail wrong. A classic example documented by the researchers involves a prompt requesting a specific spatial arrangement, such as "a beach on the left and a city on the right," where the model correctly grasps the individual concepts but flips the layout in the generated image, losing the critical spatial coherence[4]. The deficit extends to other demanding tasks, notably the notoriously difficult task of rendering text accurately within a generated image, where models routinely fail to spell correctly or place words appropriately[4]. The researchers argue that while UMMs have achieved remarkable success in their 'comprehension' capabilities, a significant and frustrating gap persists in their 'generation' capacity, a discrepancy UniCorn is designed to address[3].

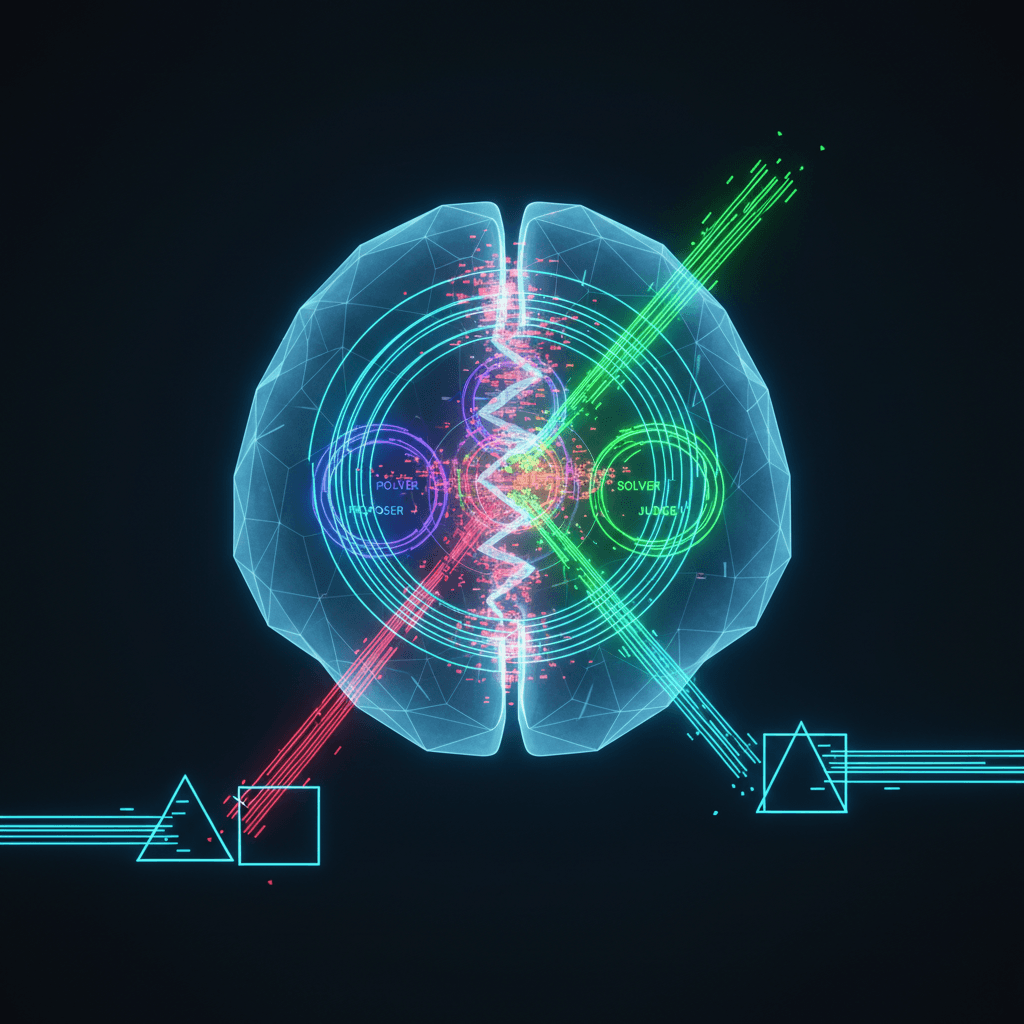

UniCorn’s architecture introduces a powerful and innovative solution through a fully self-contained learning paradigm that mimics structured cognitive collaboration. The framework requires no external data or supplementary teacher models, instead partitioning a single unified multimodal model into a sophisticated, multi-agent system operating entirely within its own parameter space[2][3]. This self-improvement process is executed through three distinct internal roles: the Proposer, the Solver, and the Judge[1][2]. The Proposer initiates the cycle by generating diverse and expansive prompts, essentially acting as an internal drill instructor creating challenging test cases[2]. Following this, the Solver assumes the task of synthesizing multiple image candidates corresponding to the generated prompt[1]. Critically, the process culminates with the Judge, a role leveraging the model’s superior comprehension capabilities to evaluate the quality and fidelity of the Solver's output, providing evaluative rewards and feedback based on its ability to accurately assess the generated image against the original, complex prompt[2][3]. This loop simulates a continuous process of self-critique and refinement, transforming the model's latent interpretive capability into an explicit, internal training signal[2].
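The Proposer, Solver, and Judge cycle can be pictured with a toy sketch. Everything in it is an assumption made for illustration: `ToyUnifiedModel`, `Prompt`, `Record`, and `self_play_round` are hypothetical names, images are replaced by simple `(left, right)` layout tuples, and the binary reward is a stand-in for whatever scoring the real Judge uses. It is not the researchers' implementation, only a minimal picture of one model object playing all three roles.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Prompt:
    left: str
    right: str

    def text(self) -> str:
        return f"a {self.left} on the left and a {self.right} on the right"


@dataclass(frozen=True)
class Record:
    prompt: Prompt
    layout: tuple  # (left, right): toy stand-in for a generated image
    reward: float


class ToyUnifiedModel:
    """Hypothetical stand-in: a single object plays all three roles."""

    SCENES = ["beach", "city", "forest", "lake"]

    def __init__(self, flip_rate: float = 0.4, seed: int = 0):
        self.flip_rate = flip_rate  # assumed chance of a flipped layout
        self.rng = random.Random(seed)

    def propose(self) -> Prompt:
        # Proposer: invent a spatial prompt with two distinct scenes.
        left, right = self.rng.sample(self.SCENES, 2)
        return Prompt(left, right)

    def solve(self, prompt: Prompt, n: int) -> list:
        # Solver: produce n candidates, some with the layout flipped.
        layouts = []
        for _ in range(n):
            flipped = self.rng.random() < self.flip_rate
            layouts.append(
                (prompt.right, prompt.left) if flipped
                else (prompt.left, prompt.right)
            )
        return layouts

    def judge(self, prompt: Prompt, layout: tuple) -> float:
        # Judge: reward 1.0 only when the layout matches the prompt.
        return 1.0 if layout == (prompt.left, prompt.right) else 0.0


def self_play_round(model, num_prompts=3, candidates_per_prompt=4):
    """Run one propose -> solve -> judge pass and collect scored records."""
    records = []
    for _ in range(num_prompts):
        prompt = model.propose()
        for layout in model.solve(prompt, candidates_per_prompt):
            records.append(Record(prompt, layout, model.judge(prompt, layout)))
    return records


if __name__ == "__main__":
    for r in self_play_round(ToyUnifiedModel()):
        print(f"{r.prompt.text()!r} -> {r.layout} reward={r.reward}")
```

In this toy version the scored records are simply returned; in the framework as described, such self-generated judgments become the training signal for the generative side of the same model.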

This multi-agent sampling process generates rich, raw interaction data, which is then distilled into usable training material through a mechanism called Cognitive Pattern Reconstruction. This step converts the voluminous raw outputs into structured training signals, explicitly mapping the latent understanding of the Judge into supervision for the Solver's generative tasks[1][2]. These structured signals include descriptive captions, evaluative judgments, and reflective feedback patterns, which collectively serve as the self-generated data required to autonomously narrow the comprehension-generation gap[2]. To rigorously validate the framework's effectiveness, the team introduced UniCycle, a novel cycle-consistency benchmark based on a Text → Image → Text reconstruction loop, which checks that generated images not only look good but also accurately preserve the key semantic and spatial details of the original prompt[3].
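The idea behind a Text → Image → Text cycle check can be sketched in a few lines. This is a toy under stated assumptions, not the UniCycle benchmark itself: the article does not describe how UniCycle scores reconstructions, so the position-wise token match below is an illustrative choice, and `faithful_generate`, `flipped_generate`, `describe`, and `cycle_consistency` are hypothetical names with a dictionary standing in for an image.

```python
from typing import Callable


def positional_match(a: str, b: str) -> float:
    """Fraction of token positions where the two strings agree (assumed metric)."""
    ta, tb = a.lower().split(), b.lower().split()
    if not ta and not tb:
        return 1.0
    matches = sum(x == y for x, y in zip(ta, tb))
    return matches / max(len(ta), len(tb))


def faithful_generate(prompt: str) -> dict:
    # Toy "image": assumes prompts shaped like
    # "a X on the left and a Y on the right".
    words = prompt.split()
    return {"left": words[1], "right": words[7]}


def flipped_generate(prompt: str) -> dict:
    # Toy generator exhibiting the layout-flip failure described above.
    scene = faithful_generate(prompt)
    return {"left": scene["right"], "right": scene["left"]}


def describe(scene: dict) -> str:
    # Toy captioner: turns the "image" back into text.
    return f"a {scene['left']} on the left and a {scene['right']} on the right"


def cycle_consistency(
    prompt: str,
    generate: Callable[[str], dict],
    caption: Callable[[dict], str],
    similarity: Callable[[str, str], float] = positional_match,
) -> float:
    """Text -> image -> text, then compare the reconstruction to the prompt."""
    image = generate(prompt)
    reconstructed = caption(image)
    return similarity(prompt, reconstructed)


if __name__ == "__main__":
    prompt = "a beach on the left and a city on the right"
    print("faithful:", cycle_consistency(prompt, faithful_generate, describe))
    print("flipped: ", cycle_consistency(prompt, flipped_generate, describe))
```

A faithful generator reconstructs the prompt exactly, while the flipped one scores lower because the two scene words land in the wrong positions. A bag-of-words comparison would miss that error entirely, which is why the sketch compares tokens by position.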

The reported results are significant, showcasing the framework's efficacy in restoring multimodal coherence. Applied across six major image generation benchmarks, the framework achieved comprehensive and substantial improvements over the baseline models[3]. Notably, UniCorn achieved state-of-the-art (SOTA) performance on key benchmarks such as TIIF (73.8), DPG (86.8), and CompBench (88.5)[3]. It also delivered substantial gains elsewhere, including +5.0 on the WISE benchmark and +6.5 on OneIG[3]. Perhaps the most critical achievement lies in the models’ ability to overcome the text rendering failure, with UniCorn delivering a massive 22-point jump in accurate text generation on the OneIG benchmark, a feat that directly addresses one of the most persistent visual symptoms of the so-called Conduction Aphasia[4].

The implications of this research are profound for the future of the AI industry. By demonstrating that a model can successfully self-correct and leverage its own internal critique for refinement, UniCorn points to a scalable path for self-supervised multimodal intelligence[4]. It shifts the paradigm of training by showing that high-quality generative models can be achieved without the perpetual need for massive external datasets or costly human-labeled supervision, thus reducing the dependency on ever-growing computational resources[2]. The framework's ability to bridge the fundamental disparity between comprehension and generation in UMMs through a principled, neurologically inspired diagnosis offers a promising path toward building more robust, reliable, and human-like cognitive systems that can truly translate deep understanding into accurate and controllable action[3]. This breakthrough solidifies the importance of self-contained, internal feedback mechanisms as a crucial component for achieving the next generation of unified artificial general intelligence.