AI-Generated Fakes Cripple St. Louis Monkey Search, Draining Emergency Resources

A simple animal recovery mission exposes how easily AI tools can undermine critical public safety efforts.

January 13, 2026

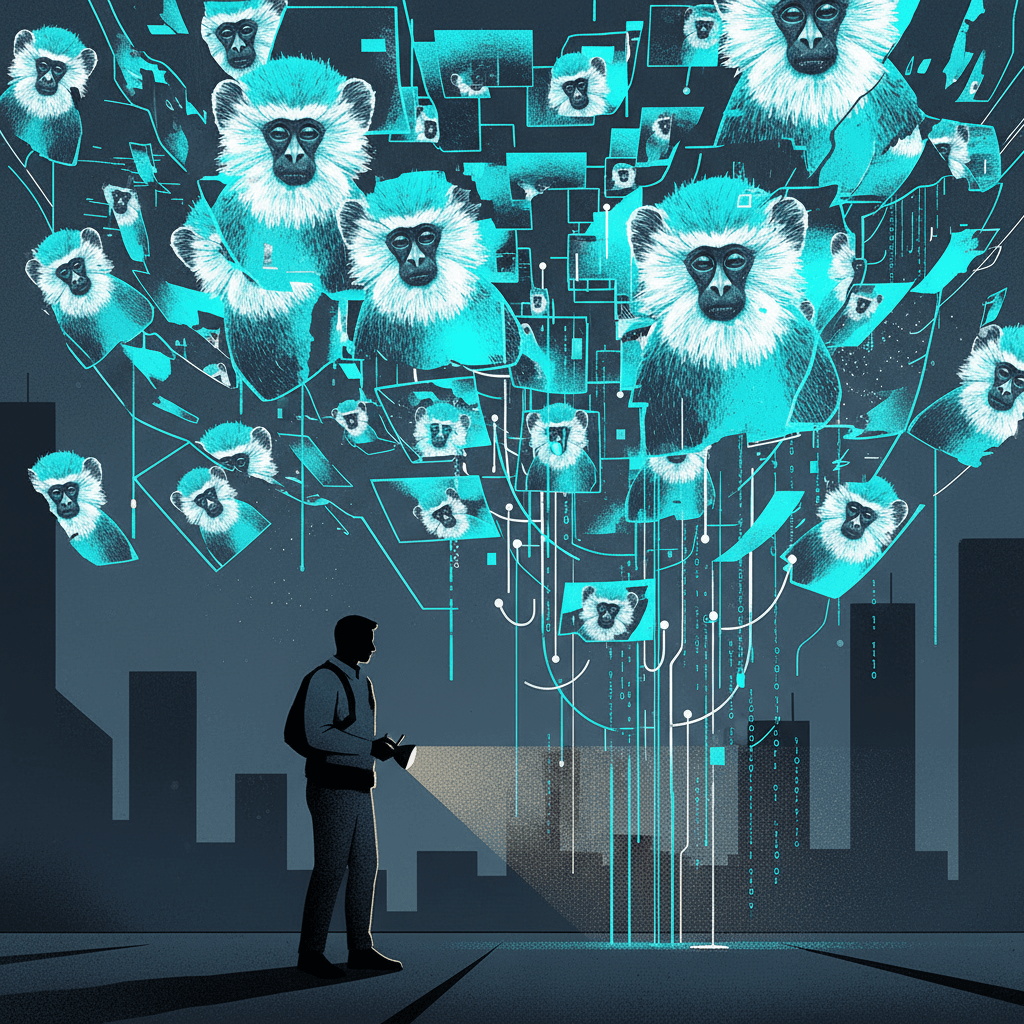

The search for a group of vervet monkeys loose in St. Louis has unexpectedly become a microcosm of the modern information crisis, with local authorities struggling to separate credible public sightings from a torrent of convincing but entirely fabricated images generated by artificial intelligence. What began as an unusual animal escape has quickly turned into a logistical and communications nightmare for the city's health and animal control departments, exposing a dangerous new frontier in the fight against digital misinformation. The incident underscores the real-world consequences of readily accessible generative AI technology, which is now actively impeding public safety efforts and draining crucial resources.

Animal control officers, working with primate experts from the St. Louis Zoo, are combing North City, particularly the area around O'Fallon Park, where the vervet monkeys were first spotted. The situation remains fluid and ambiguous, a challenge in itself even before digital fakery enters the picture. Officials have been unable to confirm exactly how many primates escaped, though reports suggest as many as four animals may still be at large. The search is further complicated by the monkeys' unknown origin: because private ownership of these animals, which are native to sub-Saharan Africa, is prohibited within the city, the owner is highly unlikely to come forward to claim them or explain how they escaped. Adding to the urgency, vervet monkeys are intelligent and social, but officials have warned that they can become unpredictable or even aggressive under stress, posing a potential, albeit minor, public safety risk that calls for their swift and accurate capture[1][2][3].

According to local officials, the primary obstacle to the search is the rapid and widespread circulation of AI-generated imagery across social media platforms such as X, formerly known as Twitter. St. Louis Department of Health spokesperson Willie Springer said the escape has sparked "rumour after rumour," making it significantly harder to discern "what's genuine and what's not"[4][5]. These fake posts are not minor distortions of fact; they are high-quality, believable fabrications that directly interfere with establishing an accurate search perimeter and responding to actionable intelligence. A single verified sighting by a police officer is a small needle of truth in an enormous haystack of digital noise, which includes images falsely claiming the monkeys have already been captured, claims that officials have had to publicly debunk[3][1].

The nature of the AI hoaxes shows the double-edged character of current generative technology. Many of the fabricated images, reportedly created by residents "just having fun," depict the primates in comical and unrealistic scenarios, such as joining a local street gang, drinking from malt liquor bottles, or even taking a separately escaped goat "hostage"[6][7][8]. The intent behind these jokes may seem harmless, but their visual realism, often indistinguishable from authentic photography to the untrained eye, forces officials to spend time and resources verifying every substantial report. Sifting through this digital "slop," as one commentator called it, turns the serious work of tracking and recovering potentially dangerous animals into a resource-intensive "wild monkey chase"[6][8]. Deepfakes, even when intended as satire, create a significant data integrity problem for emergency responders, delaying real action and potentially putting both the animals and the public at risk.

This local incident serves as a tangible case study for the wider AI industry, shifting the conversation about generative media from abstract ethical debates to concrete public safety concerns. Deepfake worries have historically centered on political figures or national security, yet this event shows how accessible AI image tools can immediately disrupt localized, on-the-ground crisis management. The ease with which a critical public information environment can be polluted reveals a systemic vulnerability: because images shared online lack reliable provenance, citizens trying to help, or simply following the news, can easily be misled by the hoaxes, amplifying false leads and adding to the chaos[9][7]. The challenge for tech companies is therefore not only to curb the creation of malicious deepfakes but also to develop safeguards, such as robust watermarking or metadata verification standards, that preserve the integrity of visual evidence in everyday emergency and journalistic contexts. Without such mechanisms, every public crisis, from an escaped animal to a natural disaster, will be fought on two fronts: the physical world and the digital battlefield of synthetic media.

As the search for the vervet monkeys continues, local officials are urging the public to use only official channels to report genuine sightings, a plea that is itself a concession to the power of the AI-driven rumour mill. The St. Louis monkey chase therefore stands as a clear warning to the global AI community and to emergency management services: technology designed to generate convincing illusions has become a direct, measurable impediment to essential civic functions. The incident offers a valuable, if alarming, data point on the escalating erosion of visual trust, showing that the generative AI revolution has irrevocably changed the landscape of emergency response and can turn a straightforward animal recovery mission into a complex exercise in digital forensics.