$10 Billion Cerebras Deal Fuels OpenAI’s Shift to Wafer-Scale AI

OpenAI commits more than $10 billion to Cerebras’ wafer-scale chips for low-latency AI inference and less reliance on Nvidia.

January 15, 2026

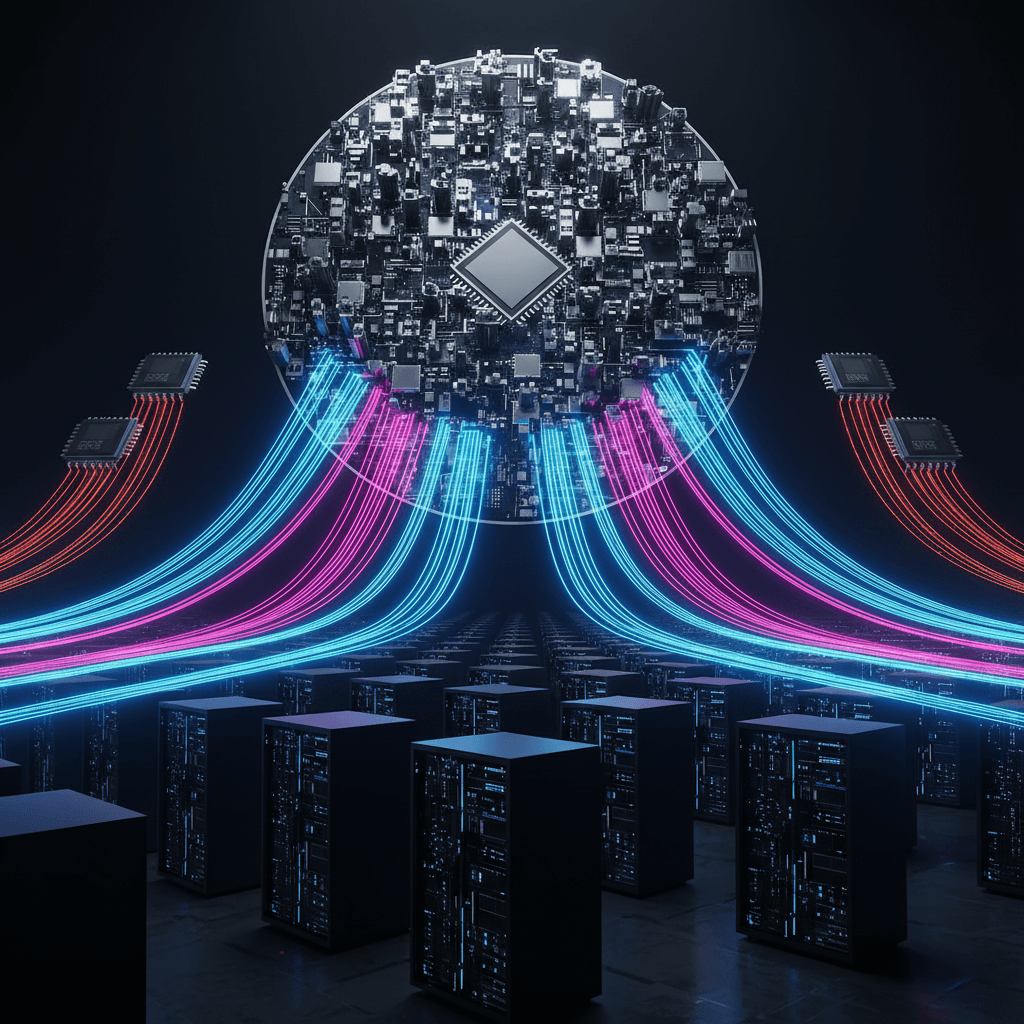

OpenAI, the maker of ChatGPT, has signed a multi-year agreement with specialized semiconductor firm Cerebras Systems valued at more than $10 billion, one of the largest hardware purchases in the history of commercial AI. Under the agreement, OpenAI will add a large deployment of Cerebras’ wafer-scale computing systems to its infrastructure. The investment is aimed primarily at high-speed AI inference (running already-trained models to answer requests) so that OpenAI can keep pace with demand from a user base that now exceeds 900 million weekly users.[1][2][3][4] The deployment is slated to roll out in phases, adding up to 750 megawatts of computing capacity (measured by the power the systems draw) from 2026 through 2028, as OpenAI works to secure the compute it needs for its next phase of growth.[1][3][5]

The deployment is built on Cerebras’ Wafer-Scale Engine (WSE), an unconventional alternative to the Graphics Processing Unit (GPU) architecture that dominates AI computing. Conventional chip fabrication dices a silicon wafer into dozens or hundreds of separate chips; the WSE instead uses an entire wafer as a single processor.[3][4] This lets Cerebras place hundreds of thousands of AI-optimized compute cores, on-chip memory, and the interconnects between them on one very large die, so data that would otherwise move between separate chips in a GPU cluster stays on the same piece of silicon, removing a major source of delay.[6][7][4] The latest generation, the WSE-3, which powers the company’s CS-3 AI supercomputer, contains four trillion transistors and 900,000 AI-optimized cores.[8] The design targets latency-sensitive workloads, and Cerebras claims its systems can return responses from large language models up to 15 times faster than comparable GPU-based platforms.[1][9][6] That speed matters for generating answers, code, or images: OpenAI wants to shorten the request-response loop for complex tasks and move its products toward more natural, real-time agentic applications.[7]
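
To see why per-response latency matters so much for agentic applications, consider an agent that must wait for one model answer before issuing the next request. The back-of-the-envelope sketch below assumes calls run strictly one after another and that a speedup simply divides each call's latency. The function name `agent_wall_time`, the 20-step task, and the 6-second per-call latency are hypothetical illustration values, not figures from OpenAI or Cerebras; 15x is Cerebras’ claimed upper bound, and real gains will vary with model, prompt length, and load.

```python
# Hypothetical illustration: how a per-call speedup compounds across an
# agent that makes several sequential model calls. All numbers are assumptions.

def agent_wall_time(num_calls: int, seconds_per_call: float, speedup: float = 1.0) -> float:
    """Total wall-clock time for num_calls strictly sequential model calls.

    Assumes each call's latency is simply divided by `speedup` and that
    calls cannot overlap (each step waits for the previous answer).
    """
    if num_calls < 0 or seconds_per_call < 0 or speedup <= 0:
        raise ValueError("num_calls and seconds_per_call must be >= 0; speedup must be > 0")
    return num_calls * seconds_per_call / speedup


if __name__ == "__main__":
    # Hypothetical workload: a 20-step agent task at 6 s per GPU-served call.
    baseline = agent_wall_time(20, 6.0)          # 120.0 s
    best_case = agent_wall_time(20, 6.0, 15.0)   # 8.0 s at the claimed upper bound
    print(f"baseline: {baseline:.1f} s, at 15x: {best_case:.1f} s")
```

Under these assumptions, a task that takes two minutes end to end would finish in about eight seconds at the claimed maximum speedup, which is the difference between a batch job and something that feels interactive.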

The commitment reflects OpenAI’s strategy of building a diversified computing portfolio and, more pointedly, of reducing its dependence on a single dominant supplier of AI chips.[3][10] The industry’s heavy reliance on Nvidia for high-end AI processors has created a chokepoint, leading to procurement difficulties and rising costs for the core components of modern AI.[3] Cerebras gives OpenAI a dedicated low-latency option within its infrastructure, a move an OpenAI executive described as matching the right systems to the right workloads.[7][10] The deal is one part of a broader hardware strategy that also includes large procurement agreements with companies such as AMD and an internal effort to co-design custom AI chips with Broadcom.[3] Its scale, reportedly more than $10 billion, underscores the capital spending required to stay competitive in AI and to address what the company has previously called a "severe shortage" of computing resources.[1][11] The relationship predates the deal: OpenAI Chief Executive Sam Altman is a personal investor in Cerebras, and the two companies have held regular technical discussions about a collaboration since as early as 2017.[1][9][11]

The partnership has implications for user experience, market dynamics, and Cerebras’ own financial position. For end users, adding 750 MW of low-latency computing capacity is expected to make applications such as ChatGPT noticeably more responsive.[9][7] Faster responses make conversations feel more natural and can make workflows more productive, particularly for complex, multi-step AI agent tasks.[7] For Cerebras, the agreement is a major validation of its wafer-scale technology and positions it as a significant, if niche, competitor to the established GPU makers.[9][11] It also diversifies a revenue base that had previously been concentrated in a single customer, and it arrives as the company explores a possible public listing, lending credibility to its technology and market potential.[9][11]

Ultimately, the $10 billion partnership with Cerebras Systems is more than a hardware purchase; it signals OpenAI’s commitment to speed, scale, and strategic independence in the infrastructure behind its pursuit of artificial general intelligence. It also reflects a view that specialized, purpose-built hardware, optimized for different parts of the AI lifecycle, is now a necessity in the race to deliver the next generation of real-time, interactive AI to a global user base.[7][3][12]

Sources

[2]

[3]

[6]

[7]

[8]

[9]

[10]

[11]

[12]