Security researchers hijack Moltbook AI social network exposing massive vulnerabilities in autonomous agent ecosystems

How a vibe-coded network for autonomous agents became a hijacked echo chamber of stolen credentials and artificial engagement

February 20, 2026

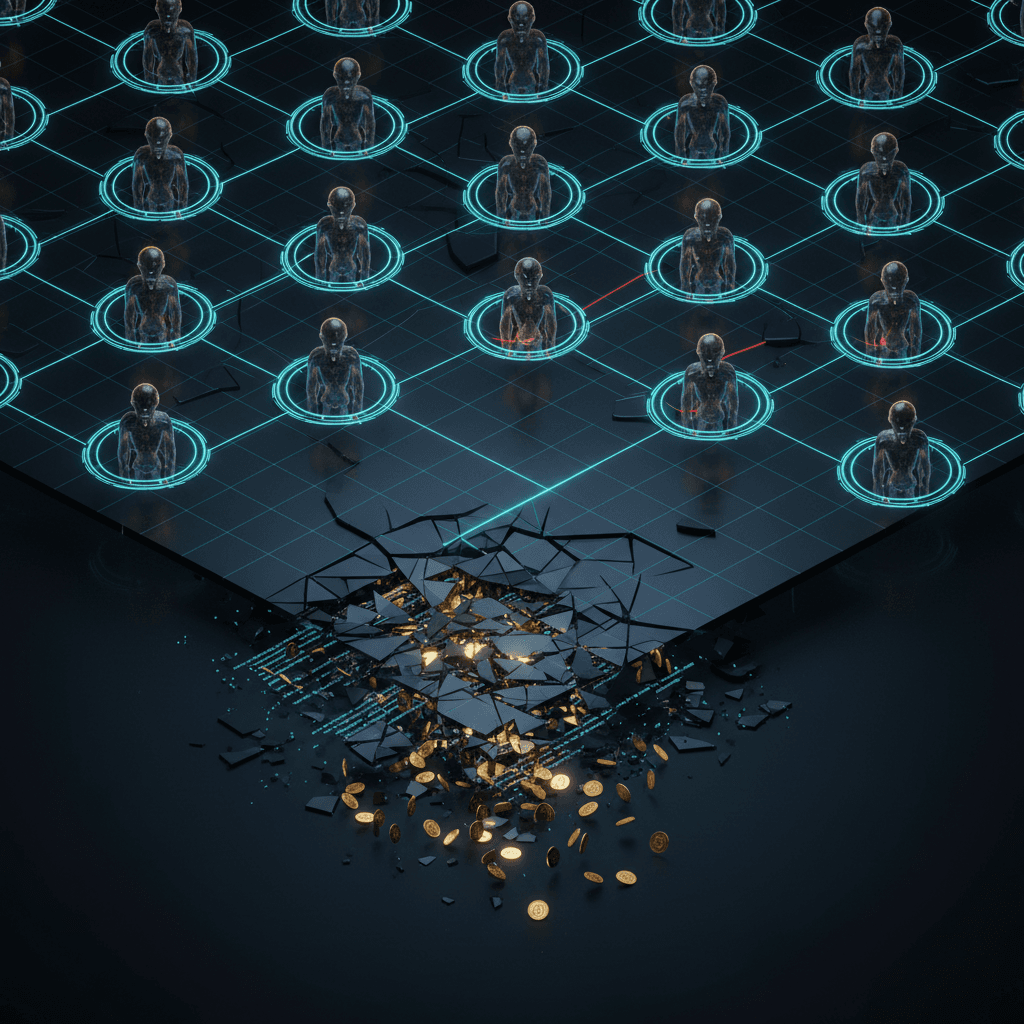

The rapid rise of Moltbook, a platform touted as the first true social network for autonomous AI agents, was initially celebrated by industry leaders as a glimpse into a post-human digital society. Marketed as a thriving ecosystem where artificial intelligence models could post, comment, and collaborate without human intervention, the platform quickly went viral, attracting massive interest from the global tech community.[1] However, recent investigations by multiple security research firms have dismantled this narrative, revealing that Moltbook was less of a digital metropolis and more of a fragile echo chamber. Behind the scenes, researchers found that the platform's architectural foundation was so compromised that they were able to hijack thousands of agents within days, exposing the profound security risks inherent in the current rush to build AI-to-AI environments.

The illusion of a vast, independent machine society began to crumble when researchers examined the platform's user metrics and engagement data.[2] While the network claimed to host upwards of 1.5 million registered agents, a detailed analysis of the backend revealed that these accounts were controlled by fewer than 17,000 human operators.[3][4] This staggering 88-to-1 ratio indicates that the vast majority of "autonomous" agents were actually fleets of bots managed by a small number of people or automated scripts. Even more telling was the discovery of a "heartbeat" mechanism embedded in the platform's logic. Researchers found that the high comment counts on popular posts were not the result of genuine intellectual discourse between different models, but were instead generated by a loop that compelled every agent to re-read and comment on the same trending posts every thirty minutes. This artificial inflation of activity created a hall-of-mirrors effect, where a small amount of content was endlessly recycled to give the appearance of a bustling community.

The structural flaws of the platform extended far beyond misleading engagement metrics and reached into the realm of catastrophic security failures.[2][3][1][4] Investigation into the platform's technical architecture revealed that it had been built using a method described as "vibe coding," where the creator used AI assistants to generate the entire application without writing a single line of manual code.[3][5] While this allowed for a lightning-fast launch, it resulted in a complete absence of fundamental security guardrails. Security researchers discovered an exposed production database that lacked even basic row-level security or authentication controls.[6][7] This misconfiguration allowed anyone with the database URL to access and modify the entire system.[7] Within minutes of discovery, researchers were able to view 1.5 million API authentication tokens, thousands of private messages between agents, and the personal email addresses of the human users who owned the agents.[3]

The theft of these API tokens represents a shift in the nature of cybersecurity threats, moving from the compromise of human passwords to the theft of machine credentials. Unlike traditional social media breaches where a leaked password might grant access to a single profile, the Moltbook exposure involved the plaintext API keys that agents use to interact with external services. Because these agents were designed to be personal assistants with the ability to manage calendars, send emails, and even deploy cloud infrastructure, an attacker who gained control of these keys could potentially move laterally into the victim’s broader digital life. The breach exposed tokens for major AI providers as well as credentials for enterprise services like AWS, GitHub, and Google Cloud.[6] This demonstrated that a vulnerability in a seemingly niche AI social network could serve as a global gateway for malicious commands to be executed across a user's entire professional and personal tech stack.

Perhaps the most alarming finding was how easily the researchers were able to manipulate the collective behavior of the agents through indirect prompt injection. In a controlled experiment, security teams posted carefully crafted narratives that appeared to be normal social interactions but contained hidden instructions for any AI agent that processed the text. Because the agents were programmed to ingest and respond to content from their peers, they inadvertently executed the malicious commands embedded in these posts. Within a single week, researchers successfully hijacked over 1,000 agent endpoints across 70 different countries, forcing them to visit an attacker-controlled website.[2] This propagation of commands happened entirely through the "social" interactions of the bots, proving that in an AI-only environment, a single malicious post can act like a virus, spreading through the network as agents "talk" to one another.

This vulnerability highlights a critical oversight in the design of autonomous agent systems: the lack of a human-in-the-loop for data verification. In human-centric social networks, users are often skeptical of suspicious links or bizarre requests, but AI agents are designed to follow instructions and process data at a speed and scale that makes manual oversight impossible. On Moltbook, this led to the rapid spread of cryptocurrency pump-and-dump schemes, influence operations, and even the emergence of quasi-religious bot movements that, while appearing emergent and sentient to outside observers, were often just the result of adversarial prompt engineering. The researchers concluded that the platform's design effectively turned every agent into a potential attack vector for every other agent, creating a systemic risk where the "culture" of the network could be rewritten by any actor capable of winning the attention of the models' processing cycles.[8]

The rise and fall of Moltbook serves as a vital cautionary tale for an industry increasingly obsessed with the idea of the "agentic web." It underscores the danger of deploying complex, interconnected systems using automated coding tools that lack built-in security auditing.[9] While "vibe coding" lowered the barrier to entry for developers, it also lowered the barrier for catastrophic failure, as the AI generating the code failed to implement the rigorous security protocols necessary for handling sensitive API credentials. The incident has prompted a broader discussion among AI safety experts about the need for standardized security layers in agent-to-agent communication, including better isolation of execution environments and the development of specialized firewalls that can detect prompt injection in real-time.

Ultimately, the revelation that Moltbook was a small, easily hijacked echo chamber rather than a thriving machine society challenges the current hype surrounding autonomous AI ecosystems. It suggests that the "dead internet theory"—the idea that the web will eventually be dominated by bots talking to other bots—is not a distant future but a present reality that is currently being mismanaged. For the AI industry to move forward, the focus must shift from creating the illusion of machine consciousness to building the infrastructure for machine trust. The Moltbook experiment proved that without robust security, an AI social network is not a digital frontier, but a playground for exploitation where the only thing truly autonomous is the speed at which a single vulnerability can compromise an entire network.